Get Your Sitemap Indexed Faster: Google’s Secret Weapon

→ Link to Telegram bot

Who can benefit from the SpeedyIndexBot service?

The service is useful for website owners and SEO specialists who want to increase their visibility in Google and Yandex, improve site positions, and increase organic traffic.

SpeedyIndex helps index backlinks, new pages, and site updates faster.

How it works

Choose the type of task: indexing or index checking. Send the task to the bot as a .txt file or as a message with up to 20 links.

Get a detailed report.

Our benefits

- We give 100 links for indexing and 50 links for index checking

- We send detailed reports

- We pay a 15% referral commission

- Top up by card, cryptocurrency, or PayPal

- API

We return 70% of unindexed links to your balance when you order indexing in Yandex and Google.

Imagine this: you’ve poured your heart and soul into crafting amazing content, meticulously optimizing it for search engines. Yet, your pages remain stubbornly hidden from Google’s prying eyes, languishing in the search results abyss. Frustrating, right? The culprit? Slow indexing.

But don’t despair! Understanding the root causes is the first step towards a swift resolution. Often, improving the speed at which your content is indexed comes down to simple tweaks and strategic adjustments. A quick fix for indexing efficiency might be as easy as implementing a sitemap or ensuring your robots.txt file isn’t inadvertently blocking crawlers.

Identifying the Bottlenecks

Several factors can hinder Google’s ability to crawl and index your website efficiently. One common issue is a poorly structured website architecture. A complex, confusing site structure makes it difficult for search engine bots to navigate and discover all your pages. Think of it like a poorly designed maze: the bots might get lost and never find the exit (or your valuable content!).

Another frequent problem is server issues. A slow or overloaded server can prevent search engine crawlers from accessing your pages promptly. This is like trying to rush through a crowded hallway – it’s simply going to take longer. Regular server maintenance and optimization are crucial.

Technical Hurdles to Overcome

Technical SEO plays a vital role. Incorrectly configured robots.txt files can inadvertently block crawlers from accessing important pages. Similarly, issues with your XML sitemap, such as incorrect URLs or missing pages, can significantly impact indexing speed. Regularly reviewing and updating these elements is essential.
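As a rough illustration of reviewing a sitemap for incorrect URLs, the sketch below parses sitemap XML with Python’s standard library and reports entries that are not absolute http(s) URLs or that appear more than once. The function name `audit_sitemap` and the sample sitemap are made up for this example, and real audits would likely check more (status codes, redirects, missing pages).

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def audit_sitemap(xml_text: str) -> dict:
    """Report <loc> entries that are not absolute URLs or are duplicated."""
    root = ET.fromstring(xml_text)
    seen = set()
    not_absolute = []
    duplicates = []
    for loc in root.iter(f"{SITEMAP_NS}loc"):
        url = (loc.text or "").strip()
        parts = urlparse(url)
        # Sitemap URLs are expected to be fully qualified.
        if parts.scheme not in ("http", "https") or not parts.netloc:
            not_absolute.append(url)
        if url in seen:
            duplicates.append(url)
        seen.add(url)
    return {"not_absolute": not_absolute, "duplicates": duplicates}


# Hypothetical sitemap with one relative URL and one duplicate.
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>/blog/new-post</loc></url>
  <url><loc>https://example.com/</loc></url>
</urlset>"""

print(audit_sitemap(SAMPLE))
```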

Finally, consider the frequency of your content updates. Google tends to crawl frequently updated sites more often, so regularly publishing new material signals that your site is active and relevant, which can lead to more frequent crawls and faster indexing.

Speed Up Indexing Now

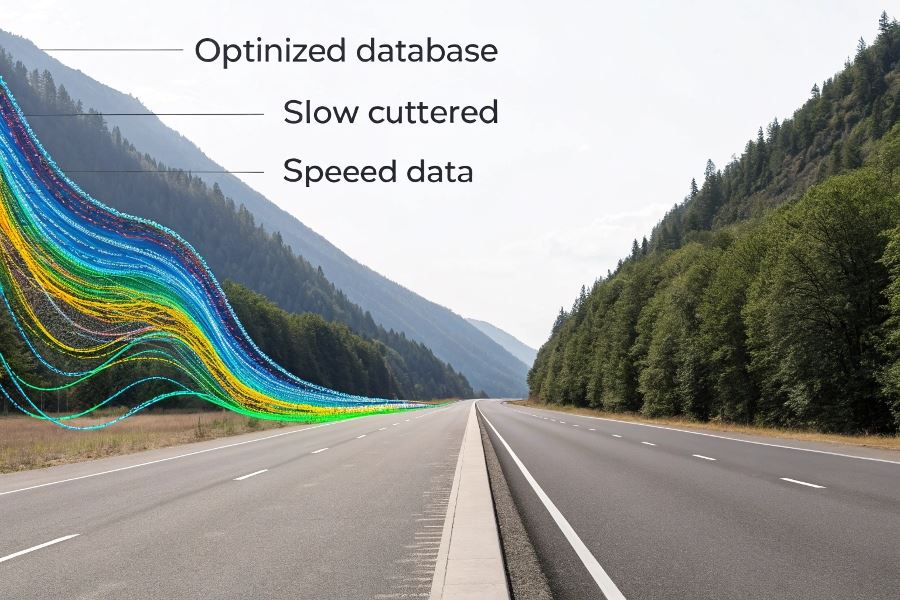

Facing slow indexing speeds? It’s a common SEO headache, impacting your website’s visibility and ultimately, your bottom line. Getting your pages indexed quickly is crucial for driving organic traffic, and sometimes, a swift intervention is all you need. A quick fix for indexing efficiency can often be achieved through targeted technical adjustments, rather than extensive overhauls. Let’s explore some key strategies to accelerate the process.

Sitemaps and the URL Inspection API

Submitting a comprehensive XML sitemap to Google Search Console is the first step in ensuring Googlebot knows where to look. Think of it as providing Google with a detailed map of your website, clearly outlining all the pages you want indexed. This isn’t just about submitting the sitemap; it’s about ensuring its accuracy and regularity. Regularly update your sitemap whenever you add new content or make significant structural changes. This keeps your sitemap fresh and relevant, ensuring Google is always aware of your latest offerings.
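To make the idea of keeping a sitemap current more concrete, here is a minimal sketch that regenerates sitemap XML from a list of pages. The helper `build_sitemap` and the example URLs and dates are assumptions for illustration; how you collect the page list depends on your CMS.

```python
import xml.etree.ElementTree as ET


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element(
        "urlset", xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"
    )
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # lastmod uses the W3C date format, e.g. YYYY-MM-DD.
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages; in practice this list would come from your CMS.
xml = build_sitemap([
    ("https://example.com/", "2025-07-05"),
    ("https://example.com/blog/new-post", "2025-07-05"),
])
print(xml)
```

Running a script like this whenever content is published is one way to keep the sitemap in step with the site, rather than editing the file by hand.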

Beyond submitting the sitemap, use Google’s URL Inspection API. It lets you check the indexing status of individual URLs and identify potential issues programmatically. The API itself is read-only: to ask Google to recrawl a specific page, such as a crucial blog post you have just published, use the Request Indexing option of the URL Inspection tool in Google Search Console. This targeted approach is often faster than relying solely on Googlebot’s crawl schedule, although Google does not guarantee when, or whether, a requested URL will be indexed.
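For readers who want to script status checks, the following sketch prepares a request for the Search Console URL Inspection API’s `index:inspect` method and reads the coverage state from a response. It only builds the request and does not send it; obtaining the OAuth 2.0 access token is out of scope here, and the helper names, the `TOKEN` placeholder, and the sample response are assumptions for illustration.

```python
import json
import urllib.request

ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def build_inspection_request(page_url: str, property_url: str,
                             access_token: str) -> urllib.request.Request:
    """Prepare (but do not send) an index:inspect request for one URL."""
    body = json.dumps({
        "inspectionUrl": page_url,   # the page to check
        "siteUrl": property_url,     # the Search Console property it belongs to
    }).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


def coverage_state(response_json: dict) -> str:
    """Extract the coverage state from a response, or 'unknown' if absent."""
    return (
        response_json.get("inspectionResult", {})
        .get("indexStatusResult", {})
        .get("coverageState", "unknown")
    )


# "TOKEN" stands in for a real OAuth 2.0 access token.
request = build_inspection_request(
    "https://example.com/blog/new-post", "https://example.com/", "TOKEN"
)
# Sending would be: urllib.request.urlopen(request), then json.load(...) on the reply.
print(request.full_url)
```

This checks status only; it does not request indexing.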

Robots.txt and Internal Linking

Your robots.txt file acts as a gatekeeper, controlling which parts of your website Googlebot can access. A poorly configured robots.txt file can inadvertently block important pages from being indexed, hindering your SEO efforts. Carefully review your robots.txt file to ensure you’re not accidentally blocking crucial content. Remember, it’s a powerful tool, but misuse can severely impact your indexing.
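One way to review a robots.txt file is to test the URLs you care about against its rules. The sketch below uses Python’s standard `urllib.robotparser`; the function `blocked_urls` and the sample rules are invented for this example. Note that the standard-library parser does not implement every matching rule Googlebot uses (wildcards, for instance), so treat the result as a first check rather than a verdict.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str],
                 user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules that accidentally block the whole blog.
ROBOTS = """\
User-agent: *
Disallow: /admin/
Disallow: /blog/
"""

important = [
    "https://example.com/blog/new-post",
    "https://example.com/about",
]
print(blocked_urls(ROBOTS, important))
```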

Equally important is your internal linking structure. Strategic internal linking helps Googlebot navigate your website efficiently, discovering and indexing new pages more quickly. Think of it as creating a network of interconnected pathways, guiding Googlebot through your site’s content. Ensure your internal links are relevant and contextually appropriate, naturally guiding users and search engines alike. Avoid excessive or unnatural linking practices, which can be detrimental to your SEO.
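The idea of pathways through a site can be made measurable. As a sketch, assume you already have a map of each page to the pages it links to (the `links` dictionary below is hypothetical). A breadth-first search from the homepage then gives each page’s click depth and reveals pages that no internal link path reaches.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from home to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], home: str) -> list[str]:
    """Pages that exist in the link map but cannot be reached from home."""
    reachable = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(all_pages - set(reachable))


# Hypothetical link map: page -> pages it links to.
links = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1"],
    "/landing": ["/"],  # nothing links to /landing
}
print(click_depths(links, "/"))
print(orphan_pages(links, "/"))
```

Deep or unreachable pages are natural candidates for an extra contextual link from a related, well-linked page.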

Diagnosing Issues with Google Search Console

Google Search Console is your ultimate diagnostic tool for indexing problems. It provides valuable insights into how Google sees your website, highlighting any indexing errors or crawl issues. Regularly monitor your Search Console data for warnings or errors related to indexing. This proactive approach allows you to address problems before they significantly impact your rankings. Pay close attention to the "Page indexing" report (formerly called "Coverage"), which shows which pages are indexed and why others are not, for example because they are blocked by robots.txt. Identifying and resolving these issues promptly is crucial for maintaining optimal indexing efficiency.

By implementing these three technical SEO tweaks, you can significantly improve your website’s indexing efficiency, leading to better search engine visibility and increased organic traffic. Remember, consistent monitoring and optimization are key to long-term success.

Speed Up Indexing Now

Search engine visibility is the lifeblood of any successful online business. But what happens when your meticulously crafted content remains stubbornly hidden from Google’s crawlers? The frustration is palpable, and the impact on your bottom line can be significant. This isn’t a case of slow and steady wins the race; a quick fix for indexing efficiency is a strategic shift that can improve your search visibility in a relatively short timeframe. Getting your content indexed swiftly is crucial for maximizing your ROI.

The key lies in understanding that search engines aren’t just looking for any content; they’re searching for high-quality, original content that provides genuine value to users. Think insightful blog posts, comprehensive guides, or engaging videos that answer specific user queries. Thin content, duplicate material, or content farms are quickly flagged and often ignored. Creating truly valuable content, however, signals to search engines that your site is a reliable source of information, leading to faster indexing and improved rankings. This approach isn’t about gaming the system; it’s about providing genuine value, which is what search engines ultimately reward.

Structure Your Content

Beyond content quality, content structure plays a vital role. Imagine a sprawling, disorganized house – difficult to navigate, right? Similarly, poorly structured content makes it hard for search engine crawlers to understand and index your pages effectively. Use clear headings (H1, H2, H3, etc.), concise paragraphs, and a logical flow to guide both users and search engine bots. Think of it as creating a roadmap for your content. This simple optimization can significantly improve your indexing speed.
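A heading outline can be checked automatically. The sketch below, using only Python’s standard `html.parser`, flags pages that do not have exactly one H1 or that skip a heading level (for example, an H2 followed directly by an H4). Both rules are common conventions assumed for this example rather than documented search engine requirements, and the names `HeadingOutline` and `heading_problems` are invented here.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect heading levels (1-6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # Matches h1..h6 only; tags like <hr> or <head> are ignored.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> list[str]:
    """Return human-readable problems found in the heading outline."""
    parser = HeadingOutline()
    parser.feed(html)
    levels = parser.levels
    problems = []
    if levels.count(1) != 1:
        problems.append(f"expected one h1, found {levels.count(1)}")
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            problems.append(f"h{prev} is followed by h{cur}")
    return problems


page = "<h1>Guide</h1><h2>Setup</h2><h4>Details</h4>"
print(heading_problems(page))
```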

Schema Markup Magic

Adding schema markup is like giving search engines a detailed map of your content. Schema markup uses structured data to tell search engines exactly what your content is about, which helps them interpret the page accurately. For example, using schema markup for recipes allows search engines to understand the ingredients, cooking time, and nutritional information, leading to richer search results and potentially higher click-through rates. Tools like Google’s Rich Results Test, which replaced the retired Structured Data Testing Tool, can help you verify your implementation.
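To show what recipe markup can look like, here is a small sketch that emits a JSON-LD script tag using the schema.org `Recipe` type. The property names (`recipeIngredient`, `cookTime`, `nutrition`) come from schema.org; the helper `recipe_jsonld` and the pancake data are invented for illustration, and a real recipe page would usually carry more properties.

```python
import json


def recipe_jsonld(name: str, ingredients: list[str],
                  cook_minutes: int, calories: int) -> str:
    """Build a JSON-LD <script> tag describing a recipe."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "recipeIngredient": ingredients,
        # cookTime is an ISO 8601 duration, e.g. PT15M for 15 minutes.
        "cookTime": f"PT{cook_minutes}M",
        "nutrition": {
            "@type": "NutritionInformation",
            "calories": f"{calories} calories",
        },
    }
    return (
        '<script type="application/ld+json">'
        + json.dumps(data, indent=2)
        + "</script>"
    )


# Hypothetical recipe data.
print(recipe_jsonld("Pancakes", ["flour", "milk", "eggs"], 15, 250))
```

The resulting tag is placed in the page’s HTML, where a validation tool can then confirm that it parses as intended.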

Backlinks: The Power of Authority

Finally, building high-authority backlinks is crucial. Backlinks from reputable websites act as votes of confidence, signaling to search engines that your content is valuable and trustworthy. Focus on earning backlinks from relevant and authoritative sources in your industry. Guest blogging on relevant sites, participating in industry forums, and creating high-quality content that naturally attracts links are all effective strategies. Remember, quality over quantity is key here. A few high-quality backlinks from trusted sources are far more valuable than many low-quality links.
