Speedy Configuration of Google Indexing

→ Link to Telegram bot

Who can benefit from SpeedyIndexBot service?

The service is useful for website owners and SEO specialists who want to increase their visibility in Google and Yandex, improve site positions, and increase organic traffic.

SpeedyIndex helps to index backlinks, new pages and updates on the site faster.

How it works.

Choose the type of task: indexing or index checking. Send the task to the bot as a .txt file or as a message with up to 20 links.

Get a detailed report.

Our benefits

- We give 100 links for indexing and 50 links for index checking

- Detailed reports

- 15% referral payout

- Top-up by card, cryptocurrency, or PayPal

- API

We return 70% of unindexed links back to your balance when you order indexing in Yandex and Google.

Imagine your website, a treasure trove of valuable content, languishing in the digital shadows, unseen by potential customers. Getting your pages indexed quickly and reliably is crucial for online success. This isn’t just about getting found; it’s about ensuring your content reaches the right audience at the right time. How quickly and consistently search engines like Google can find and process your content directly affects your visibility and overall SEO performance.

Technical SEO: The Foundation of Fast Indexing

A well-structured website is the cornerstone of efficient indexing. Crawlability, the ability of search engine bots to access your pages, is paramount. Broken links, incorrect robots.txt configurations, and excessive use of JavaScript can all hinder this process. Indexability refers to whether a page is suitable for inclusion in the search engine index. Pages with thin content or duplicate content are less likely to be indexed effectively. Finally, a logical site architecture, with clear internal linking, helps search engines navigate your website efficiently. Think of it as creating a well-lit, clearly-marked map for search engine bots to follow.
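One crawlability check that is easy to automate is whether robots.txt blocks pages you expect to be crawled. The following is a minimal sketch using Python's standard `urllib.robotparser`; the robots.txt content, the `example.com` URLs, and the `blocked_urls` helper are all made up for illustration, and a real check would read the live file from your own site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would fetch the live file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical URLs that should (or should not) be crawlable.
urls = [
    "https://example.com/",
    "https://example.com/blog/post-1",
    "https://example.com/admin/login",
    "https://example.com/search",
]
print(blocked_urls(ROBOTS_TXT, urls))
```

If an important page shows up in the returned list, the robots.txt rules are worth revisiting before looking for other causes.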

Content is King (and Queen of Indexing Speed)

High-quality, relevant content is not just about pleasing users; it’s about signaling to search engines that your website is a valuable resource. Fresh, original content that satisfies user search intent is more likely to be prioritized by search engine algorithms. Conversely, low-quality, thin content, or content that’s already widely available elsewhere, will likely be overlooked. Focus on creating valuable, engaging content that naturally incorporates relevant keywords.

Server Speed: The Unsung Hero

Even the best content and site architecture can be hampered by slow server performance. A slow-loading website frustrates users, and slow or failing responses can lead crawlers to fetch fewer pages per visit. Reliable hosting and a Content Delivery Network (CDN) help keep loading times short and the user experience positive, which in turn supports faster indexing. Investing in a robust infrastructure is an investment in your website’s visibility.

Unlock Faster Indexing

Getting your content discovered quickly and reliably is crucial for online success. The faster search engines understand and index your website, the sooner you start seeing organic traffic. But achieving this requires a strategic approach that goes beyond simply creating great content. Speed and reliability in indexing aren’t just desirable; they’re essential for maximizing your SEO efforts and reaching your target audience. Let’s explore how to optimize your site for rapid and dependable indexing.

Schema Markup Magic

Search engines rely on context to understand your content. Schema markup acts as a translator, providing structured data that clarifies the meaning of your pages. By using schema, you give search engines a detailed, machine-readable description of each page, so they can grasp the key information without inferring it from the layout. For example, using the Product schema type on an e-commerce product page explicitly defines the product’s name, description, price, and availability, which can make the page eligible for richer snippets in search results and may improve click-through rates. Implementing schema is relatively straightforward, with tools like Google’s Structured Data Testing Tool (https://t.me/indexingservis) available to help you validate your markup. Properly implemented schema helps search engines interpret your pages correctly once they are crawled.
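As an illustration of the Product example, here is a small sketch that renders schema.org Product data as a JSON-LD script tag. The `product_jsonld` helper and the sample product are hypothetical; only the `@context`, `@type`, and property names come from the schema.org vocabulary.

```python
import json


def product_jsonld(name: str, description: str, price: float, currency: str, in_stock: bool) -> str:
    """Render a schema.org Product as a JSON-LD <script> tag."""
    availability = "InStock" if in_stock else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
        },
    }
    # Escape "</" so text inside the JSON cannot close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical product used only for illustration.
print(product_jsonld("Trail Running Shoe", "Lightweight shoe for rough terrain.", 79.9, "USD", True))
```

The resulting tag would go in the page's HTML, and it is still worth running the output through a validator before relying on it.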

Guiding Crawlers Efficiently

XML sitemaps and robots.txt are fundamental tools for managing how search engine crawlers interact with your website. An XML sitemap provides a list of your website’s URLs, acting as a guide that helps crawlers discover your pages. Meanwhile, robots.txt lets you control which parts of your website crawlers may fetch and which they should skip. Note that robots.txt governs crawling rather than indexing: to keep a page out of the index, use a noindex directive instead. Using these tools strategically can improve the speed and reliability of your indexing process. For instance, a well-structured XML sitemap can help crawlers find important pages sooner. Similarly, a carefully crafted robots.txt file can prevent crawlers from wasting time on irrelevant or duplicate content. Remember to regularly update your sitemap as you add new content.
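To make the sitemap idea concrete, the sketch below builds a minimal XML sitemap with Python's standard library. The `build_sitemap` helper, the URLs, and the dates are hypothetical; the namespace and the `urlset`, `url`, `loc`, and `lastmod` elements follow the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Build a sitemap document from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


# Hypothetical pages; in practice these would come from your CMS or database.
pages = [
    ("https://example.com/", date(2025, 7, 13)),
    ("https://example.com/blog/post-1", date(2025, 7, 10)),
]
print(build_sitemap(pages))
```

Regenerating this file whenever content is published or updated keeps the `lastmod` values meaningful.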

Internal Linking Power

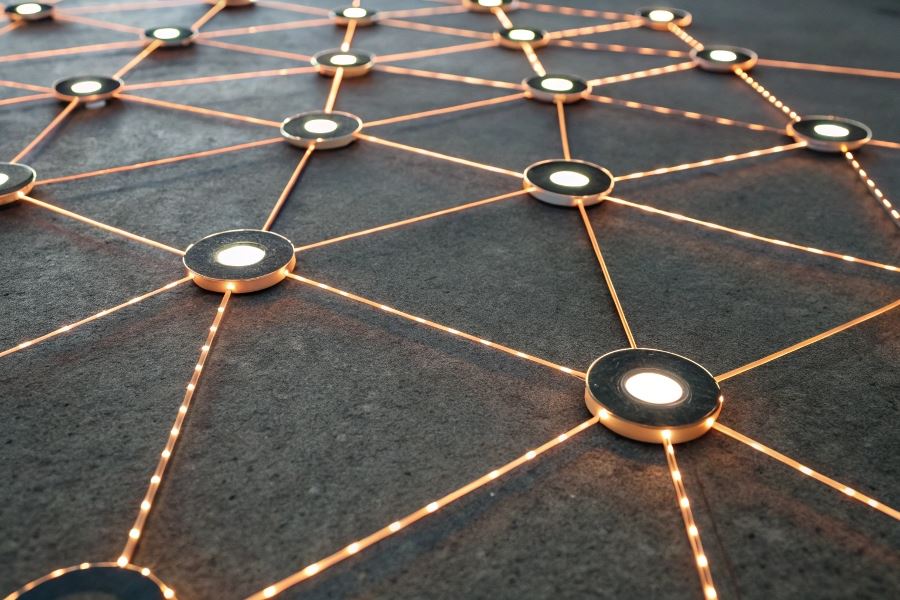

Internal linking is often overlooked, but it’s a powerful tool for optimizing indexing. By strategically linking relevant pages within your website, you create a network of interconnected content that helps search engine crawlers navigate your site more efficiently. This improves the discoverability of your pages, leading to faster and more reliable indexing. Think of it as creating a clear path for crawlers to follow, ensuring they don’t miss any crucial pages. For example, linking from your homepage to your most important blog posts helps distribute link equity and signals to search engines the importance of those pages. A well-planned internal linking strategy is a cornerstone of a robust SEO strategy. Ensure your internal links are relevant and use descriptive anchor text.
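One way to audit internal linking is to treat the site as a graph and measure how many clicks each page is from the homepage. The sketch below uses a breadth-first search over a hand-written link map; the `links` data and both helper names are hypothetical, and a real audit would build the map from a crawl of your own site.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str) -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from start to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], start: str) -> list[str]:
    """Pages that appear in the link map but cannot be reached from start."""
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(all_pages - set(click_depths(links, start)))


# Hypothetical internal link map: page -> pages it links to.
links = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1"],
    "/products": [],
    "/blog/post-1": ["/"],
    "/old-landing": ["/"],
}
print(click_depths(links, "/"))
print(orphan_pages(links, "/"))
```

Pages with a large click depth, or pages that no other page links to, are natural candidates for additional internal links.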

By implementing these strategies, you can significantly improve how quickly and reliably your website is indexed, ultimately boosting your organic search visibility and driving more traffic to your site. Remember, consistent optimization is key. Regularly review and refine your approach to ensure your website remains easily accessible and understandable to search engine crawlers.

Unlocking Search Visibility: Mastering Indexing

Let’s face it: a slow, unreliable indexing process is a silent killer of organic traffic. You could have the most brilliantly crafted content, perfectly optimized for your target keywords, but if Google’s bots aren’t crawling and indexing your pages efficiently, your visibility suffers. Speed and reliability in indexing directly impact your search engine rankings and overall online presence. Getting this right is crucial for maximizing your return on investment in content creation and SEO efforts.

This isn’t about theoretical SEO; it’s about practical strategies to diagnose and fix indexing problems. The first step is understanding what’s happening behind the scenes. Google Search Console is your indispensable ally here. By meticulously analyzing your GSC data, you can pinpoint specific pages experiencing indexing delays or outright failures. Are there patterns emerging? Are certain types of content being overlooked? Are there server errors consistently reported? Identifying these issues is the foundation for effective remediation. For example, a sudden drop in indexed pages might signal a recent sitemap issue or a change in your robots.txt file. Analyzing this data helps you proactively address these issues before they significantly impact your rankings.

Diagnose Indexing Problems

Google Search Console provides a wealth of information. Look beyond the high-level overview. Dive into the individual page reports to understand the specific indexing status of each URL. Pay close attention to any error messages or warnings. Are there crawl errors? Are pages marked as "noindex"? Are there issues with canonicalization? Addressing these granular details is key to improving your overall indexing performance. Remember, Google Search Console (https://t.me/indexingservisabout) is your primary tool for this analysis.

Track Your Crawl Rate

Monitoring your crawl rate is equally important. A crawl rate that’s too slow means Google isn’t visiting your pages frequently enough, potentially delaying the indexing process. Conversely, an excessively high crawl rate can overload your server, leading to crawl errors and negatively impacting your site’s performance. Finding the sweet spot is crucial. Google Search Console provides insights into your crawl stats, allowing you to identify potential bottlenecks. If you notice a significant drop in your crawl rate, investigate potential server issues or robots.txt configurations.
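Server access logs offer a second view of crawl activity alongside Search Console. The sketch below counts requests per day whose user agent mentions Googlebot, plus how many of them got a 5xx response. It assumes the common "combined" access log format; the sample lines and the `googlebot_stats` helper are made up for illustration, and since user-agent strings can be spoofed, the counts are only an approximation.

```python
import re
from collections import Counter

# Matches the date, request path, status, and user agent of a combined-format log line.
LOG_LINE = re.compile(
    r'\[(?P<day>\d{2}/\w{3}/\d{4}):[^\]]+\] "(?:GET|HEAD) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def googlebot_stats(lines):
    """Return (hits_per_day, server_errors_per_day) for requests claiming to be Googlebot."""
    hits, errors = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if not match or "Googlebot" not in match["agent"]:
            continue
        hits[match["day"]] += 1
        if match["status"].startswith("5"):
            errors[match["day"]] += 1
    return hits, errors


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Hypothetical log lines used only for illustration.
sample_log = [
    f'66.249.66.1 - - [12/Jul/2025:10:15:32 +0000] "GET /blog/post-1 HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [12/Jul/2025:10:16:02 +0000] "GET /products HTTP/1.1" 503 312 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [13/Jul/2025:08:01:45 +0000] "GET / HTTP/1.1" 200 8120 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [13/Jul/2025:09:30:00 +0000] "GET / HTTP/1.1" 200 8120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
hits, errors = googlebot_stats(sample_log)
print(dict(hits), dict(errors))
```

A falling daily hit count or a rising share of 5xx responses is the kind of bottleneck worth investigating on the server side.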

Optimize Indexing Strategies

Once you’ve identified areas for improvement, it’s time to experiment. A/B testing different indexing strategies can reveal what works best for your specific website. For example, you could test different sitemap submission frequencies or experiment with different robots.txt directives. You might even test the impact of improving your website’s overall speed and performance. By carefully tracking the results of these tests, you can refine your approach and optimize for faster, more reliable indexing. Remember to isolate variables in your A/B tests to ensure accurate results. This iterative process of testing and refinement is essential for continuous improvement.
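When comparing two strategies, it helps to check whether the difference in outcomes is larger than chance would explain. The sketch below applies a standard two-proportion z-test to the share of URLs indexed within a fixed window in each group. The counts are invented purely for illustration, the `two_proportion_z` helper is hypothetical, and the test assumes reasonably large, independent groups.

```python
import math


def two_proportion_z(success_a: int, n_a: int, success_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for the difference between two proportions (B minus A)."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    # Standard error under the null hypothesis that both groups share one rate.
    # This is zero (and the division below fails) if every URL or no URL was indexed.
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented counts: URLs indexed within 7 days out of URLs submitted, per group.
z, p_value = two_proportion_z(success_a=60, n_a=100, success_b=75, n_b=100)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value suggests the difference is unlikely to be noise, but it says nothing about which variable caused it, which is why isolating a single change per test matters.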
