Unlock Your SEO Potential: Mastering Link Indexing

→ Link to Telegram bot

Who can benefit from SpeedyIndexBot service?

The service is useful for website owners and SEO specialists who want to increase their visibility in Google and Yandex, improve site positions, and increase organic traffic.

SpeedyIndex helps to index backlinks, new pages and updates on the site faster.

How it works

Choose the task type: indexing or index checking. Send the task to the bot as a .txt file, or as a message containing up to 20 links.

Get a detailed report.

Our benefits

- We give 100 links for indexing and 50 links for index checking

- We send detailed reports

- We pay a 15% referral commission

- Top up your balance by card, cryptocurrency, or PayPal

- API access

We return 70% of unindexed links back to your balance when you order indexing in Yandex and Google.

Want to see your website climb the search engine results pages (SERPs)? It’s not just about great content; it’s about ensuring search engines can find that great content. This means understanding the crucial role of indexing.

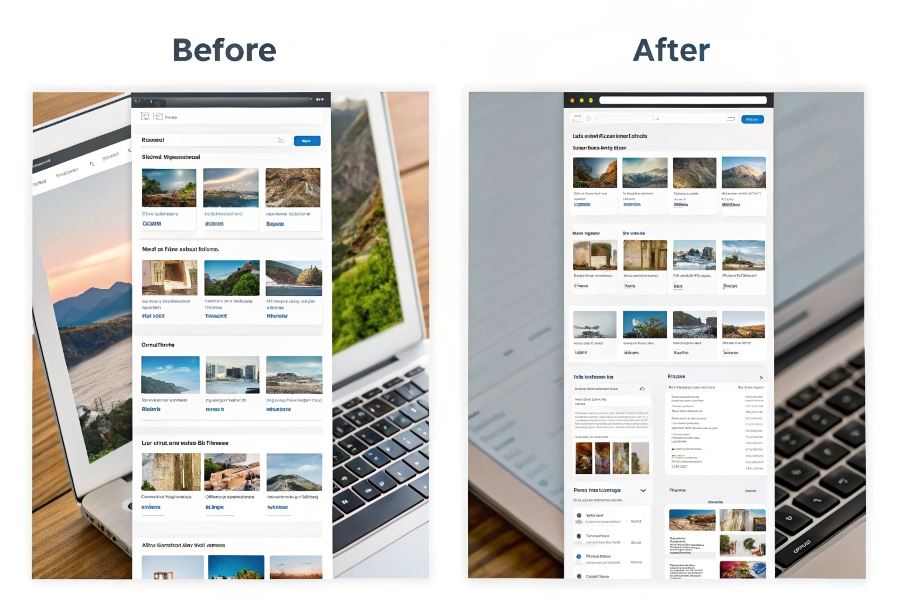

Getting your pages indexed efficiently is key to improving your website’s visibility. An indexing strategy boosts rankings by focusing on how search engines discover and process your content. This involves optimizing your website’s technical aspects and understanding how search engine crawlers work.

Crawl Budget: Your Website’s Digital Allowance

Search engines like Google have a limited amount of resources to crawl and index websites. This is known as your crawl budget. Think of it as a finite number of visits a search engine bot can make to your site within a given timeframe. A large, complex website with poor internal linking might quickly exhaust its crawl budget, leaving many pages unindexed. Optimizing your crawl budget involves improving your site architecture, using XML sitemaps, and ensuring efficient internal linking. This helps guide crawlers to your most important pages, maximizing your indexing potential.
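One rough way to see how internal linking affects crawling is to measure click depth: how many links a crawler must follow from the home page to reach each page. The sketch below runs a breadth-first search over a small link graph. The `site` dictionary and its paths are hypothetical, made up for illustration; on a real site the graph would come from a crawl of your own pages.

```python
from collections import deque


def click_depths(links, start):
    """Breadth-first search over an internal-link graph.

    links maps each URL path to the list of paths it links to.
    Returns {path: minimum number of clicks from start}.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site structure, for illustration only.
site = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1"],
    "/products": ["/products/widget"],
    "/blog/post-1": ["/blog/post-2"],
    "/orphan": [],
}

depths = click_depths(site, "/")
# Pages that no internal link reaches are never found by following links.
unreachable = sorted(set(site) - set(depths))

for path, depth in sorted(depths.items(), key=lambda item: item[1]):
    print(depth, path)
print("unreachable:", unreachable)
```

Pages that sit many clicks deep, or that nothing links to at all, are reasonable candidates for extra internal links or for inclusion in the XML sitemap.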

Technical SEO: Removing Indexing Roadblocks

Technical SEO issues can significantly hinder indexing. Broken links, slow loading speeds, and duplicate content all make it harder for search engine crawlers to reach and process your pages: broken links waste crawl requests, slow responses reduce how many pages get fetched, and duplicates spread signals across several URLs. Regularly auditing your website for these issues is crucial. Tools like Google Search Console can help identify and address these problems. For example, fixing broken links improves the user experience and stops crawlers from spending requests on dead URLs. Addressing these technical hurdles helps search engines crawl and index your content efficiently, which in turn supports better search rankings.
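A basic audit for two of these issues can be sketched in a few lines. The example below assumes you already have crawl results as a mapping from URL to status code and body text (the `pages` data here is invented), flags URLs that returned an error status, and groups pages whose normalized text is identical. Exact-match hashing is a simplification: it will not catch near-duplicates.

```python
import hashlib
from collections import defaultdict


def audit(pages):
    """pages maps URL -> (HTTP status code, body text) from a prior crawl.

    Returns (broken, duplicates): URLs with a 4xx/5xx status, and groups
    of URLs whose whitespace- and case-normalized bodies are identical.
    """
    broken = sorted(url for url, (status, _) in pages.items() if status >= 400)

    by_hash = defaultdict(list)
    for url, (status, body) in pages.items():
        if status == 200:
            normalized = " ".join(body.lower().split())
            digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
            by_hash[digest].append(url)

    duplicates = sorted(sorted(urls) for urls in by_hash.values() if len(urls) > 1)
    return broken, duplicates


# Hypothetical crawl results, for illustration only.
pages = {
    "/a": (200, "Hello   World"),
    "/b": (200, "hello world"),
    "/c": (404, ""),
    "/d": (200, "Something else"),
}

broken, duplicates = audit(pages)
print("broken:", broken)
print("duplicate groups:", duplicates)
```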

Mastering Search Engine Visibility

Let’s face it: getting your website noticed in the vast expanse of the internet is a challenge. You’ve poured your heart and soul into creating compelling content, but if search engines can’t find it, all that effort is wasted. This isn’t about simply creating content; it’s about ensuring search engines understand what you’ve built. An indexing strategy is the key to boosting rankings, and it starts with a deep understanding of how search engines crawl and index your website.

Taming the Crawl: XML Sitemaps and robots.txt

Search engines rely on crawlers, automated programs that scour the web and follow links to discover new pages. But how do you guide these crawlers to ensure they find all your important content? This is where XML sitemaps and robots.txt come into play. An XML sitemap acts as a roadmap, listing your website’s URLs along with optional metadata such as the last modification date and priority. This helps crawlers discover pages and decide what to recrawl, although search engines treat these values as hints, and Google has said it ignores the priority field. Conversely, robots.txt is your control panel, allowing you to specify which parts of your website should be excluded from crawling. For example, you might want to block crawlers from accessing staging areas or duplicate content. Note that robots.txt controls crawling rather than indexing: a blocked URL can still be indexed if other pages link to it. Using both effectively helps search engines focus on your most valuable pages.
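As a minimal sketch of what a sitemap contains, the following builds one with Python’s standard library. The `example.com` URLs and dates are placeholders; the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """entries is a list of (absolute URL, last-modified date as YYYY-MM-DD)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Placeholder URLs and dates, for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2025-07-01"),
    ("https://example.com/blog/post-1", "2025-07-05"),
])
print(sitemap_xml)
```

When saving the result as `sitemap.xml`, prepend an XML declaration and reference the file from robots.txt with a `Sitemap:` line, or submit it in Google Search Console.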

Schema Markup: Speaking the Search Engine Language

Search engines aren’t just looking at your text; they’re trying to understand the meaning behind it. Schema markup is a way to provide this context, using structured data to tell search engines exactly what your content is about. Imagine a recipe page: schema markup can specify the ingredients, cooking time, and nutritional information. This allows search engines to display rich snippets in search results, making your listing more visually appealing and informative. For example, LocalBusiness markup gives search engines structured details such as your address and opening hours, which can support visibility in local results. Implementing schema markup helps search engines interpret your pages and can make your listings eligible for rich results. Google’s Rich Results Test, which replaced the older Structured Data Testing Tool, and the Schema Markup Validator can help you validate your implementation.
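For the recipe example, the markup could look roughly like the JSON-LD produced below. The property names (`recipeIngredient`, `cookTime`, `nutrition`) come from the schema.org Recipe type; the recipe itself and the helper name `recipe_jsonld` are made up for illustration, and a real page would usually include more properties.

```python
import json


def recipe_jsonld(name, ingredients, cook_minutes, calories):
    """Return a <script> tag holding schema.org Recipe markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "recipeIngredient": ingredients,
        # schema.org expects durations in ISO 8601 format, e.g. PT12M.
        "cookTime": f"PT{cook_minutes}M",
        "nutrition": {
            "@type": "NutritionInformation",
            "calories": f"{calories} calories",
        },
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Invented recipe data, for illustration only.
snippet = recipe_jsonld(
    name="Simple Pancakes",
    ingredients=["200 g flour", "2 eggs", "300 ml milk"],
    cook_minutes=12,
    calories=250,
)
print(snippet)
```

The resulting tag goes into the page’s HTML, and the output can be pasted into a validator before deployment.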

Content Optimization: Relevance and Search Intent

Even with perfect crawl management and schema markup, your content needs to be relevant and address user search intent. This means understanding what keywords people use when searching for information related to your business and crafting content that directly answers their questions. Think about the user’s journey: what problem are they trying to solve? What information are they seeking? By aligning your content with search intent, you increase the chances of your pages ranking well for relevant keywords. Keyword research tools like SEMrush can help you identify high-value keywords and understand search volume and competition. Remember, high-quality, relevant content is the foundation of any successful SEO strategy. It’s not just about stuffing keywords; it’s about providing genuine value to your audience.

Ultimately, achieving high search engine rankings requires a multifaceted approach. By strategically implementing these indexing best practices, you can significantly improve your website’s visibility and attract more organic traffic. It’s about speaking the language of search engines and providing the information they need to understand and value your content.

Unlocking Ranking Potential

Let’s face it: getting your website to the top of search results is a constant battle. You’ve optimized your content, built high-quality backlinks, and maybe even dabbled in some schema markup. But are you truly maximizing the potential of your indexing strategy? Often, the difference between a steady climb and a frustrating plateau lies in the meticulous tracking and refinement of how search engines see your site. Improving your search engine rankings requires a deep understanding of how search engines crawl and index your content. Boosting rankings with an indexing strategy is not just about creating great content; it’s about ensuring search engines can find and understand it.

Monitoring Your Progress

Google Search Console is your best friend here. It provides invaluable data on how Googlebot is crawling and indexing your pages. Pay close attention to crawl errors, index coverage reports, and any warnings or messages. Are there pages Googlebot can’t access? Are there issues with your sitemap? Addressing these promptly is crucial. Beyond Google Search Console, consider using other tools to gain a more holistic view. For example, analyzing your robots.txt file with a dedicated validator can help identify potential indexing roadblocks. Remember, a well-structured sitemap submitted to Google Search Console is the foundation of effective indexing.
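A quick local check can catch the most common robots.txt mistake, which is accidentally blocking pages you want indexed. The sketch below uses Python’s built-in `urllib.robotparser` against an inline robots.txt; the rules and URLs are hypothetical. This parser does simple prefix matching and does not reproduce every nuance of how Googlebot interprets rules (wildcards, for instance), so treat it as a first pass rather than a definitive validator.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /staging/
Disallow: /search
Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def blocked_urls(urls, user_agent="Googlebot"):
    """Return the URLs that the parsed rules disallow for user_agent."""
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Pages you expect to be crawlable (placeholder URLs).
important = [
    "https://example.com/",
    "https://example.com/blog/post-1",
    "https://example.com/staging/new-home",
]

blocked = blocked_urls(important)
print("blocked:", blocked)
```

Running the same check over every URL in your sitemap is a simple way to spot pages that are listed for indexing but blocked from crawling.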

Analyzing Results

Simply getting indexed isn’t enough; you need to see results. Tracking keyword rankings and organic traffic is essential. Tools like SEMrush and Ahrefs offer comprehensive rank tracking capabilities. Look for trends. Are specific keywords performing well? Are there any unexpected drops in rankings? Correlate these changes with any modifications you’ve made to your indexing strategy. For instance, if you recently implemented a new sitemap, did it lead to an increase in indexed pages and a corresponding improvement in organic traffic for relevant keywords?
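The before-and-after comparison can be as simple as a percentage change per metric. The figures below are invented for illustration; in practice they would come from exports of your index coverage and performance reports. A change that follows a sitemap update is only a hint, since other factors may have moved at the same time.

```python
def percent_change(before, after):
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100


# Hypothetical weekly figures around a sitemap change, for illustration only.
before = {"indexed_pages": 400, "organic_clicks": 1000}
after = {"indexed_pages": 500, "organic_clicks": 1150}

changes = {
    metric: round(percent_change(before[metric], after[metric]), 1)
    for metric in before
}

for metric, change in changes.items():
    print(f"{metric}: {change:+.1f}%")
```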

Iterative Refinement

This isn’t a set-it-and-forget-it process. Analyzing your data should inform continuous improvement. Let’s say you notice a significant drop in rankings for a particular keyword after a site redesign. This might indicate an issue with internal linking, or perhaps the page’s content is no longer relevant to the search query. The key is to identify the cause, adjust your strategy (perhaps by improving internal linking or updating the content), and then monitor the results again. This iterative approach, driven by data and a willingness to adapt, is what sustains ranking improvements. Think of it as a continuous feedback loop: monitor, analyze, refine, repeat. Applied consistently, this process gives you the best chance of improving your search engine rankings over time.
