Website Indexing: A Complete Guide for 2025

Who can benefit from the SpeedyIndexBot service?

The service is useful for website owners and SEO specialists who want to increase their visibility in Google and Yandex, improve their sites' positions in search results, and increase organic traffic.

SpeedyIndex helps get backlinks, new pages, and site updates indexed faster.

How it works

Choose the type of task: indexing or index checking. Send the task to the bot as a .txt file, or as a message with up to 20 links.

Get a detailed report.

Our benefits

- We give 100 links for indexing and 50 links for index checking
- We send detailed reports
- We pay a 15% referral commission
- Top up your balance by card, cryptocurrency, or PayPal
- API access

We credit 70% of unindexed links back to your balance when you order indexing in Google and Yandex.


Tired of wrestling with your Large Language Models (LLMs) and their limited access to your valuable data? Imagine a world where your LLMs could effortlessly tap into your private documents, internal wikis, and even your personal notes. That’s the power of LlamaIndex.

LlamaIndex is a data framework for connecting LLMs to external data sources. It acts as a bridge: it ingests and indexes your data, then at query time retrieves the most relevant pieces and passes them to the model as context. This lets the model answer questions about information it was never trained on, producing more specific and contextually relevant responses.

Connecting Your Data: The Foundation of LlamaIndex

LlamaIndex's core functionality revolves around three key features: data connectors, index structures, and querying. First, it offers connectors (data loaders) for many sources, including PDFs, CSV files, and databases, so you can ingest your data without writing your own parsing code. Once the data is loaded, LlamaIndex splits it into chunks (called nodes) and organizes them into one or more indexes optimized for retrieval by your LLM.
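To make the connector idea concrete, here is a minimal, dependency-free sketch (not LlamaIndex's own reader classes) of how different sources can be normalized into one common record type before indexing. The Document dataclass, the load_text and load_csv helpers, and the sample data are all hypothetical.

```python
import csv
import io
from dataclasses import dataclass, field


@dataclass
class Document:
    """Hypothetical stand-in for a loaded document: text plus metadata."""
    text: str
    metadata: dict = field(default_factory=dict)


def load_text(name: str, content: str) -> list[Document]:
    # A plain-text source becomes a single document
    return [Document(text=content, metadata={"source": name, "type": "text"})]


def load_csv(name: str, content: str) -> list[Document]:
    # Each CSV row becomes its own document of "column: value" lines
    rows = csv.DictReader(io.StringIO(content))
    return [
        Document(
            text="\n".join(f"{col}: {val}" for col, val in row.items()),
            metadata={"source": name, "type": "csv", "row": i},
        )
        for i, row in enumerate(rows)
    ]


# Hypothetical in-memory sources standing in for files on disk
sources = {
    "notes.txt": "Quarterly planning starts in March.",
    "staff.csv": "name,team\nAda,Research\nLin,Support\n",
}

documents = []
for name, content in sources.items():
    # Pick a loader by file extension, as a directory reader might
    loader = load_csv if name.endswith(".csv") else load_text
    documents.extend(loader(name, content))

for doc in documents:
    print(doc.metadata["source"], "|", doc.text.replace("\n", "; "))
```

Whatever the source format, everything downstream (chunking, indexing, querying) only has to handle one record type.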

Querying and Index Structures: Getting the Right Answers

Querying is straightforward: you ask a question in natural language, LlamaIndex retrieves the relevant chunks from the chosen index, and the LLM synthesizes an answer from them. Different index structures, such as vector indexes and keyword indexes, suit different data types and query needs. For example, a vector index is ideal for semantic search, while a keyword index is better suited to exact-match queries.
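The exact-match behavior of a keyword index can be illustrated with a small inverted index. This is a toy sketch in plain Python, not LlamaIndex's keyword-table implementation; the function names and sample documents are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters/digits, so "Sea-level" -> "sea", "level"
    return re.findall(r"[a-z0-9]+", text.lower())


def build_keyword_index(docs: dict[str, str]) -> dict[str, set[str]]:
    # Inverted index: each token maps to the IDs of the documents containing it
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def keyword_query(index: dict[str, set[str]], query: str) -> set[str]:
    # Exact-match semantics: a document must contain every query token
    tokens = tokenize(query)
    if not tokens:
        return set()
    matches = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        matches &= index.get(token, set())
    return matches


docs = {
    "a": "Sea-level rise threatens coastal towns.",
    "b": "Invoice 1042 was paid in May.",
    "c": "Erosion is reshaping the coast.",
}
index = build_keyword_index(docs)
print(keyword_query(index, "invoice 1042"))    # {'b'}
print(keyword_query(index, "coastal"))         # {'a'}
print(keyword_query(index, "climate change"))  # set()
```

Only document "a" matches "coastal", even though document "c" is about the coast, and "climate change" matches nothing: a keyword index finds literal terms only, which is the gap a vector index fills.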

LlamaIndex vs. Other Frameworks: A Quick Comparison

While other LLM frameworks exist, LlamaIndex distinguishes itself through its ease of use and focus on data connection. Many alternatives require significant coding expertise or lack the versatility to handle diverse data sources. LlamaIndex simplifies the process, making it accessible to a broader range of users.

| Framework | Ease of Use | Data Source Variety | Querying Capabilities |
|---|---|---|---|
| LlamaIndex | High | High | Excellent |
| LangChain | Medium | Medium | Good |
| Other Frameworks | Low | Low | Varies |

LlamaIndex empowers you to unlock the full potential of your LLMs by providing a simple yet powerful way to connect them to your data. This allows for more informed, accurate, and contextually relevant responses, transforming how you interact with your LLMs.

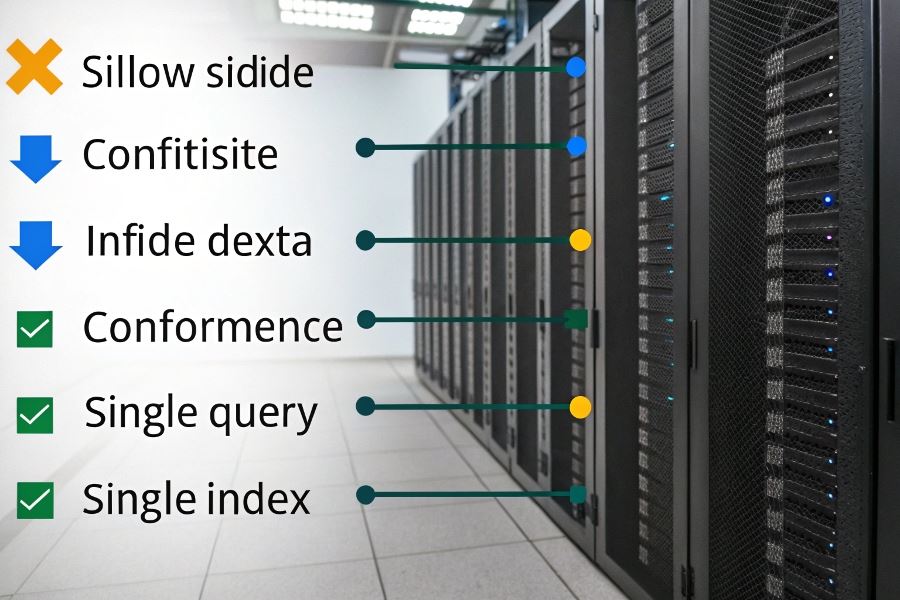

LlamaIndex Indexing Methods

Unlocking the power of LlamaIndex hinges on understanding its indexing methods. Choosing the right approach is crucial for efficient retrieval: indexing isn't just about storing information, it's about optimizing access to it. Because LlamaIndex lets you query your own documents, PDFs, or a private database without pasting everything into the LLM's prompt, the index determines which pieces of that data actually reach the model. LlamaIndex offers several index structures, each with its own strengths and weaknesses, and the right one depends on the type of data you're working with and the kinds of questions you expect to ask.

Vector Indexes: Embracing Similarity

Vector indexes are ideal for semantic search. An embedding model converts each chunk of text into a vector in a high-dimensional space, and a query is answered by retrieving the chunks whose vectors are most similar to the query's vector (commonly measured by cosine similarity) rather than by exact keyword matches. This is particularly useful for unstructured data like text documents or codebases. Imagine searching for "the impact of climate change on coastal communities": a vector index can return relevant documents even if they don't contain that exact phrase but discuss related concepts like sea-level rise or erosion. Vector databases such as Pinecone and Weaviate are often integrated with LlamaIndex to store these vectors.
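The retrieval step of a vector index boils down to ranking stored vectors by their similarity to the query vector. The sketch below uses cosine similarity over hand-written, hypothetical 3-dimensional embeddings in place of a real embedding model and vector store, so it shows the ranking logic only.

```python
import math

# Hypothetical 3-dimensional embeddings; real embedding models produce
# vectors with hundreds or thousands of dimensions.
EMBEDDINGS = {
    "sea_level.txt": [0.9, 0.1, 0.0],
    "erosion.txt": [0.7, 0.4, 0.1],
    "recipes.txt": [0.0, 0.2, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # cos(a, b) = (a . b) / (|a| * |b|); 1.0 means same direction
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vector: list[float], k: int = 2) -> list[str]:
    # Rank every stored vector by similarity to the query and keep the best k
    ranked = sorted(
        EMBEDDINGS,
        key=lambda doc_id: cosine_similarity(query_vector, EMBEDDINGS[doc_id]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical embedding of "the impact of climate change on coastal communities"
query_vector = [0.85, 0.2, 0.05]
print(top_k(query_vector))  # ['sea_level.txt', 'erosion.txt']
```

Neither file name needs to share a word with the query; what matters is that their vectors point in a similar direction. A production vector store replaces this linear scan with an approximate nearest-neighbor search.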

List Indexes: Simple and Effective

List indexes (renamed SummaryIndex in newer LlamaIndex releases) take the most straightforward approach: they store nodes as a simple, ordered sequence. Building one is cheap because no embeddings are computed, but by default a query passes every node to the LLM, so list indexes work best for small collections or for questions that need the whole sequence. For example, a list index might be a good fit for summarizing a chronologically ordered list of events or a sequence of instructions.
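A list index is simple enough to sketch in a few lines. The ToyListIndex class below is hypothetical and only mirrors the default behavior described above: inserting is a plain append, and a query places every node, in order, into the prompt context.

```python
class ToyListIndex:
    """Hypothetical list index: nodes are stored in insertion order."""

    def __init__(self) -> None:
        self.nodes: list[str] = []

    def insert(self, text: str) -> None:
        # Building is cheap: no embeddings are computed, the node is appended
        self.nodes.append(text)

    def build_prompt(self, question: str) -> str:
        # Default query behavior: every node, in order, goes to the LLM
        context = "\n".join(f"{i}. {node}" for i, node in enumerate(self.nodes, start=1))
        return f"Context:\n{context}\n\nQuestion: {question}"


index = ToyListIndex()
for step in ["Preheat the oven.", "Mix the batter.", "Bake for 30 minutes."]:
    index.insert(step)
print(index.build_prompt("Summarize the recipe."))
```

The prompt grows with every node added, which is why this structure suits small or naturally ordered collections.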

Tree Indexes: Navigating Hierarchies

Tree indexes organize information hierarchically. In LlamaIndex, a tree index builds a hierarchy over your nodes: the original chunks become leaf nodes, and each parent node holds an LLM-generated summary of its children. A query starts at the root and descends level by level, choosing the most relevant child at each step until it reaches a leaf. This top-down navigation suits large knowledge bases that break down naturally into topics and subtopics, much like a file system or an organizational chart.
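The top-down traversal can be sketched as follows. In LlamaIndex the LLM decides which child is most relevant; here a hypothetical word-overlap score stands in for that decision, and the parent summaries are written by hand rather than generated.

```python
from dataclasses import dataclass, field


@dataclass
class TreeNode:
    # Leaf: an original chunk. Parent: a summary of its children.
    text: str
    children: list["TreeNode"] = field(default_factory=list)


def relevance(text: str, query: str) -> int:
    # Hypothetical score: number of shared lowercase words.
    # LlamaIndex instead asks the LLM which child is most relevant.
    return len(set(text.lower().split()) & set(query.lower().split()))


def traverse(root: TreeNode, query: str) -> str:
    # Descend from the root, following the most relevant child at each level
    node = root
    while node.children:
        node = max(node.children, key=lambda child: relevance(child.text, query))
    return node.text


root = TreeNode("company handbook", [
    TreeNode("benefits: vacation and health insurance", [
        TreeNode("Employees get 25 vacation days per year."),
        TreeNode("Health insurance covers dental care."),
    ]),
    TreeNode("engineering: deploys and code review", [
        TreeNode("Deploys happen every Tuesday."),
        TreeNode("Every change needs one code review."),
    ]),
])

print(traverse(root, "how many vacation days do employees get"))
print(traverse(root, "when do deploys happen"))
```

Only one branch is examined per level, so a query touches a handful of summaries instead of every chunk.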

Choosing the Right Index

Selecting the optimal index structure depends on several factors. First, consider the type of data: unstructured data (like documents) is usually best served by a vector index, while small or naturally ordered collections may fit a list index. The nature of your queries is also crucial. If you need semantic search capabilities, a vector index is essential; if you need to process every item in a known order, as in summarization, a list index might suffice. Finally, the size of your dataset and the available computational resources will influence your choice: larger datasets may require more sophisticated indexing techniques, while resource constraints may call for simpler approaches.

LlamaIndex in Action

LlamaIndex finds applications across various domains. In research, it can help researchers quickly access and synthesize information from vast collections of papers. In customer service, it can empower chatbots to provide more accurate and context-aware responses by accessing relevant knowledge bases. In education, it can facilitate personalized learning experiences by tailoring information to individual student needs. The possibilities are vast, limited only by your imagination and the data you choose to connect. By carefully selecting the appropriate indexing method, you can unlock the full potential of LlamaIndex and transform how you interact with your data.

LlamaIndex Unleashed

Unlocking the power of your data has never been easier. Imagine effortlessly querying your entire personal document library, a sprawling research paper collection, or even a vast internal knowledge base – all with the simplicity of a natural language prompt. This is the promise of LlamaIndex, a powerful framework that transforms unstructured data into readily accessible information. LlamaIndex essentially acts as a bridge, connecting your data sources to large language models (LLMs) like those from OpenAI or Hugging Face. It allows you to build powerful applications that can answer complex questions based on your own unique data.

Building Your First App

Let's dive straight into building a simple LlamaIndex application, using a small collection of text files as our data source. First, install the LlamaIndex Python package (`pip install llama-index`). Then load your data with one of LlamaIndex's data connectors, such as SimpleDirectoryReader, which ingests the files in a directory. Next, create an index, choosing from the indexing strategies LlamaIndex offers, such as VectorStoreIndex or ListIndex (SummaryIndex in newer releases); the choice depends on your data and query needs. Finally, query your index in natural language. LlamaIndex handles retrieval and processing and returns a concise, relevant answer.

```python
# A minimal sketch assuming llama-index 0.10 or later (pip install llama-index)
# and an OpenAI API key in the OPENAI_API_KEY environment variable.
from llama_index.core import Settings, SimpleDirectoryReader, VectorStoreIndex
from llama_index.llms.openai import OpenAI

# Load your data: every supported file in ./data becomes a Document
documents = SimpleDirectoryReader("./data").load_data()

# Configure the LLM and prompt sizes globally; this replaces the
# LLMPredictor/PromptHelper objects used by older releases
Settings.llm = OpenAI(model="gpt-3.5-turbo", temperature=0)
Settings.context_window = 4096  # formerly max_input_size
Settings.num_output = 256       # tokens reserved for the answer

# Create the index (chunks are embedded with the default embedding model)
index = VectorStoreIndex.from_documents(documents)

# Query the index
query_engine = index.as_query_engine()
response = query_engine.query("What is the main topic of these documents?")
print(response)
```

This simple example demonstrates the core functionality. Remember to replace './data' with the actual path to your data directory and ensure you have the necessary API keys configured for your chosen LLM. You can find detailed instructions and further examples in the LlamaIndex documentation.

Troubleshooting and Optimization

Implementing LlamaIndex might present some challenges. One common issue is managing the size of your data. Very large datasets can lead to performance bottlenecks. LlamaIndex offers several strategies to mitigate this, including chunking your documents into smaller, manageable pieces and employing efficient indexing techniques. Another potential hurdle is choosing the right LLM and prompt engineering. Experimentation is key here. Start with a smaller, well-defined dataset and gradually increase complexity. Proper prompt engineering can significantly improve the accuracy and relevance of your query responses. Remember to monitor your LLM usage and costs, especially when dealing with large datasets or complex queries.
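Chunking with a small overlap, so that context spanning a boundary is not lost, can be sketched in plain Python. This version counts whitespace-separated words for simplicity, whereas LlamaIndex's SentenceSplitter counts tokens and prefers to keep sentences whole; chunk_words and its default sizes are hypothetical.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into windows of chunk_size words; neighbors share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(text, chunk_size=4, overlap=1):
    print(chunk)
# w0 w1 w2 w3
# w3 w4 w5 w6
# w6 w7 w8 w9
```

Smaller chunks make retrieval more precise but give the LLM less surrounding context per chunk, so the size is worth tuning against real queries.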

Advanced Techniques

For enhanced performance and scalability, consider exploring LlamaIndex’s advanced features. Techniques like query refinement, where you iteratively refine your queries based on initial results, can significantly improve accuracy. You can also leverage different embedding models to optimize the performance of your vector store. Furthermore, exploring different index structures, such as graph indexes, can be beneficial for certain types of data and queries. Consider using techniques like caching to speed up repeated queries. Finally, for extremely large datasets, consider distributed indexing strategies to parallelize the processing and improve scalability. LlamaIndex provides a flexible framework that allows you to adapt and optimize your solution to meet your specific needs.
