Instant indexing for Blogger


Who can benefit from the SpeedyIndexBot service?

The service is useful for website owners and SEO specialists who want to increase their visibility in Google and Yandex, improve their site's rankings, and grow organic traffic.

SpeedyIndex helps get backlinks, new pages, and site updates indexed faster.

How it works

Choose the type of task: indexing or index checking. Send the task to the bot as a .txt file, or as a message with up to 20 links.

Get a detailed report.

Our benefits

- We give you 100 links for indexing and 50 links for index checking
- We send detailed reports
- We pay a 15% referral commission
- Top up your balance by card, cryptocurrency, or PayPal
- API access

When you order indexing in Yandex and Google, 70% of the links that don't get indexed are credited back to your balance.

→ Link to Telegram bot

Ever wondered how Google (or Bing, or DuckDuckGo) finds your website? It's not magic; it's a systematic process of discovery and organization. Because your site's visibility depends on a complex interplay of factors within that process, understanding how search engines work is key to improving your search engine optimization (SEO).

Search engines employ automated programs called crawlers, or bots, to explore the World Wide Web. These tireless digital explorers navigate the internet, following links from page to page, much like a diligent librarian cataloging books. They discover new web pages, assessing their content and relevance. This process of discovery is fundamental to how indexing is done; without it, your website wouldn’t even be considered for inclusion in search results.

Crawlers: The Web’s Explorers

Googlebot, Bingbot, and other crawlers systematically follow links embedded within websites. If a page links to your website, the crawler is likely to follow that link and visit your page. This link-following behavior is a crucial part of how the web is mapped and indexed. Think of it as a vast, interconnected network of pages, with crawlers acting as the explorers charting its territories.
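To make the link-following idea concrete, here is a minimal sketch of a breadth-first crawl in Python. It assumes the web is given as an in-memory dictionary (a hypothetical stand-in for fetching pages over HTTP and parsing their links), and the example.* URLs are made up. Real crawlers such as Googlebot also honor robots.txt, rate limits, and much more, none of which is modeled here.

```python
from collections import deque


def crawl(link_graph: dict[str, list[str]], seed: str, max_pages: int = 100) -> list[str]:
    """Breadth-first crawl over an in-memory link graph.

    link_graph maps each URL to the URLs it links to; it stands in
    (hypothetically) for downloading a page and extracting its links.
    """
    visited: set[str] = {seed}          # URLs already queued or crawled
    frontier: deque[str] = deque([seed])
    discovered: list[str] = []          # pages in the order they were crawled
    while frontier and len(discovered) < max_pages:
        url = frontier.popleft()
        discovered.append(url)
        for link in link_graph.get(url, []):
            if link not in visited:     # follow each link only once
                visited.add(link)
                frontier.append(link)
    return discovered


# Hypothetical mini-web: your-site.example is only reachable via b.example.
web = {
    "https://a.example/": ["https://a.example/about", "https://b.example/"],
    "https://a.example/about": ["https://a.example/"],
    "https://b.example/": ["https://your-site.example/"],
    "https://your-site.example/": [],
}
print(crawl(web, "https://a.example/"))
```

Note that your-site.example is discovered only because b.example links to it, which is the paragraph's point about other sites linking to yours.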

Crawl Frequency: A Balancing Act

The frequency with which a crawler visits your website depends on several factors. High-quality content, regular updates, and a strong backlink profile (other websites linking to yours) all contribute to more frequent crawls. Conversely, sites with low-quality content or infrequent updates may be crawled less often. Search engines prioritize crawling websites they deem valuable and relevant to user searches.
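Crawlers typically keep a queue of URLs to visit, and prioritizing can be pictured as ordering that queue. The sketch below uses Python's heapq module as a priority-ordered crawl frontier. The crawl_priority scoring function and its weights are invented purely for illustration; search engines do not publish how they combine signals like backlinks and freshness.

```python
import heapq


def crawl_priority(backlinks: int, days_since_update: float) -> float:
    """Hypothetical score: more backlinks and fresher content mean higher priority.

    The weights are made up for this example only.
    """
    return backlinks * 1.0 + 10.0 / (1.0 + days_since_update)


class CrawlFrontier:
    """A crawl queue that always hands out the highest-priority URL next."""

    def __init__(self) -> None:
        self._heap: list[tuple[float, str]] = []

    def push(self, url: str, priority: float) -> None:
        # heapq is a min-heap, so store the negated priority to pop the largest first.
        heapq.heappush(self._heap, (-priority, url))

    def pop(self) -> str:
        _, url = heapq.heappop(self._heap)
        return url

    def __len__(self) -> int:
        return len(self._heap)


frontier = CrawlFrontier()
frontier.push("https://site.example/stale-page", crawl_priority(backlinks=1, days_since_update=365))
frontier.push("https://site.example/popular-post", crawl_priority(backlinks=40, days_since_update=2))
frontier.push("https://site.example/new-post", crawl_priority(backlinks=3, days_since_update=0))
while frontier:
    print(frontier.pop())  # popular-post, then new-post, then stale-page
```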

Prioritizing the Important Pages

Search engines don’t just crawl; they prioritize. They use sophisticated algorithms to determine which pages are most important and relevant. Factors like the quality of your content, site architecture, and internal linking structure all play a role in this prioritization. A well-structured website with clear internal links makes it easier for crawlers to understand your site’s content and prioritize the most important pages.
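One rough way to see which pages a site's internal linking favors is to count how many distinct pages link to each one. The sketch below does that for a hypothetical site map; it is a simple proxy for how prominent and reachable a page is, not a formula any search engine has documented.

```python
from collections import Counter


def inbound_link_counts(site_links: dict[str, list[str]]) -> Counter:
    """Count how many distinct pages on the site link to each page."""
    counts: Counter = Counter()
    for source, targets in site_links.items():
        for target in set(targets):      # a page linking twice still counts once
            if target != source:         # ignore self-links
                counts[target] += 1
    return counts


# Hypothetical internal link structure of a small site.
site = {
    "/": ["/guides/", "/blog/", "/guides/seo-basics", "/contact"],
    "/guides/": ["/guides/seo-basics", "/"],
    "/blog/": ["/guides/seo-basics", "/blog/old-post", "/"],
    "/guides/seo-basics": ["/guides/", "/contact"],
}
counts = inbound_link_counts(site)
# Most-linked pages first; ties broken alphabetically.
for page, n in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):
    print(f"{n} internal links -> {page}")
```

In this example /guides/seo-basics is linked from three pages while /blog/old-post is linked from only one, so the site's structure signals that the guide matters more.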

Unpacking the Web: How Search Engines Understand Your Content

Understanding how search engines index websites is crucial for effective SEO. It's not simply about robots crawling pages; it's a sophisticated process of data extraction and interpretation. Asking how indexing is done means asking how search engines transform raw web data into searchable information, a multi-stage process that goes far beyond simply reading text.

Content Processing: Beyond the Obvious

Search engines don't just see words on a page; they dissect its entire structure. Consider a blog post with embedded videos, images, and interactive elements. A search engine's crawler must first identify and process each element individually. Text is extracted and analyzed for keywords and context, and its relevance to the overall page topic is assessed. Images are examined not just for their file type (JPEG, PNG, GIF, etc.) but also for their alt text, which gives search engines a written description of what an image shows. Metadata such as title tags and meta descriptions, along with heading tags, provides further context and signals the page's main topic. Together, these steps let every part of the content contribute to the search engine's understanding of the page.
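The element-by-element extraction described above can be sketched with Python's standard-library html.parser. The PageSignals class and the sample HTML are hypothetical; production indexers also render JavaScript, cope with malformed markup at scale, and extract far more than this.

```python
from html.parser import HTMLParser
from typing import Optional


class PageSignals(HTMLParser):
    """Collect title, meta description, h1-h3 headings, image alt text, and other text.

    Script and style contents are not filtered out in this sketch.
    """

    HEADINGS = ("h1", "h2", "h3")

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.headings: list[str] = []
        self.image_alts: list[str] = []
        self.text: list[str] = []
        self._inside: Optional[str] = None  # the title/heading tag we are currently in

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # values may be None, e.g. for a bare <img alt>
        if tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.meta_description = attributes.get("content") or ""
        elif tag == "img":
            self.image_alts.append(attributes.get("alt") or "")
        elif tag == "title" or tag in self.HEADINGS:
            self._inside = tag

    def handle_endtag(self, tag):
        if tag == self._inside:
            self._inside = None

    def handle_data(self, data):
        data = data.strip()
        if not data:
            return
        if self._inside == "title":
            self.title += data
            return
        if self._inside in self.HEADINGS:
            self.headings.append(data)
        self.text.append(data)  # page text, headings included


html_doc = """<html><head><title>Best Hiking Boots</title>
<meta name="description" content="A guide to choosing hiking boots."></head>
<body><h1>Choosing Boots</h1><p>Fit matters most.</p>
<img src="boot.jpg" alt="Leather hiking boot"></body></html>"""

page = PageSignals()
page.feed(html_doc)
page.close()
print(page.title)             # Best Hiking Boots
print(page.meta_description)  # A guide to choosing hiking boots.
print(page.headings)          # ['Choosing Boots']
print(page.image_alts)        # ['Leather hiking boot']
```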

File Types and Formats: A Diverse Landscape

The web is a diverse ecosystem of file types. Search engines must handle everything from simple HTML pages to complex PDFs, videos, and audio files, and each type presents its own challenges. For example, a PDF has to be parsed before its text can be extracted, while a video might be analyzed through its captions or transcripts. How well a search engine handles these diverse formats is one of the ways search engines differ from one another. They employ various techniques, including Optical Character Recognition (OCR) for scanned documents and specialized analysis of multimedia content, so that even non-textual content can be indexed and found through relevant searches.
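Handling many formats usually means routing each file to a format-specific extractor. This sketch picks an extractor from the MIME type guessed by Python's mimetypes module. The extractor functions are hypothetical and cover only HTML and plain text; the function returns None for formats such as PDF that would need a dedicated parser, a transcript source, or an OCR engine.

```python
import mimetypes
from html.parser import HTMLParser
from typing import Callable, Optional


class _TextCollector(HTMLParser):
    """Gathers the text nodes of an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []

    def handle_data(self, data):
        if data.strip():
            self.parts.append(data.strip())


def html_to_text(raw: bytes) -> str:
    collector = _TextCollector()
    collector.feed(raw.decode("utf-8", errors="replace"))
    collector.close()
    return " ".join(collector.parts)


def plain_to_text(raw: bytes) -> str:
    return raw.decode("utf-8", errors="replace")


# Formats this sketch can handle directly. PDFs, video, audio, and scanned
# images need dedicated tools that are out of scope here.
EXTRACTORS: dict[str, Callable[[bytes], str]] = {
    "text/html": html_to_text,
    "text/plain": plain_to_text,
}


def extract_text(filename: str, raw: bytes) -> Optional[str]:
    """Choose an extractor by guessed MIME type; None means 'needs a specialized tool'."""
    mime_type, _encoding = mimetypes.guess_type(filename)
    extractor = EXTRACTORS.get(mime_type or "")
    return extractor(raw) if extractor else None


print(extract_text("index.html", b"<h1>Hello</h1><p>world</p>"))  # Hello world
print(extract_text("notes.txt", b"plain notes"))                  # plain notes
print(extract_text("report.pdf", b"%PDF-1.7"))                    # None
```

A dispatch table keeps each format's logic separate, so supporting a new type means registering one more extractor rather than editing a chain of conditionals.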

Context and Meaning: The Intelligence Behind the Index

Extracting data is only half the battle. Search engines must then understand the context and meaning of that data, which involves natural language processing (NLP) techniques. NLP lets search engines model the relationships between words, phrases, and sentences, going beyond simple keyword matching: recognizing synonyms, identifying entities (people, places, organizations), and detecting the sentiment expressed in the text are all part of this process. This contextual understanding is what allows search engines to deliver relevant results even when the exact search query doesn't appear on the page. Consider the difference between a page about "apple pie" and a page about the technology company "Apple": the word is the same, but context tells the index which topic each page is about. The ability to discern meaning from complex data is what separates a simple keyword index from a powerful search engine.
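Real search engines rely on large learned language models, but the basic idea of using surrounding words to pick a meaning can be shown with a simplified Lesk-style disambiguator. The two sense signatures below are hand-written, hypothetical word lists; the technique is a classic textbook baseline, not what Google or any other engine actually runs.

```python
import re

# Hypothetical sense inventory: each sense of "apple" is described by a few
# context words. Real systems learn such associations from large corpora.
SENSES = {
    "apple (fruit)": {"pie", "fruit", "orchard", "bake", "cinnamon", "tree", "recipe"},
    "Apple (company)": {"iphone", "mac", "ios", "company", "stock", "device", "software"},
}


def disambiguate(text: str, senses: dict[str, set[str]] = SENSES) -> str:
    """Simplified Lesk: choose the sense whose signature shares the most words with the text."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    # On a tie, max() keeps the first sense listed.
    return max(senses, key=lambda sense: len(senses[sense] & words))


print(disambiguate("Grandma's apple pie recipe with cinnamon"))    # apple (fruit)
print(disambiguate("Apple released a new iPhone and updated iOS"))  # Apple (company)
```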

Search Engine Secrets: Unveiling the Indexing Process

Imagine the internet: a sprawling, ever-changing landscape of billions of web pages. How do search engines like Google manage to sift through this colossal amount of information and deliver relevant results in a fraction of a second? The answer lies in a complex process called indexing. Understanding how indexing is done is key to understanding how search engines work: it's not just about finding pages, but about organizing and prioritizing them for fast retrieval.

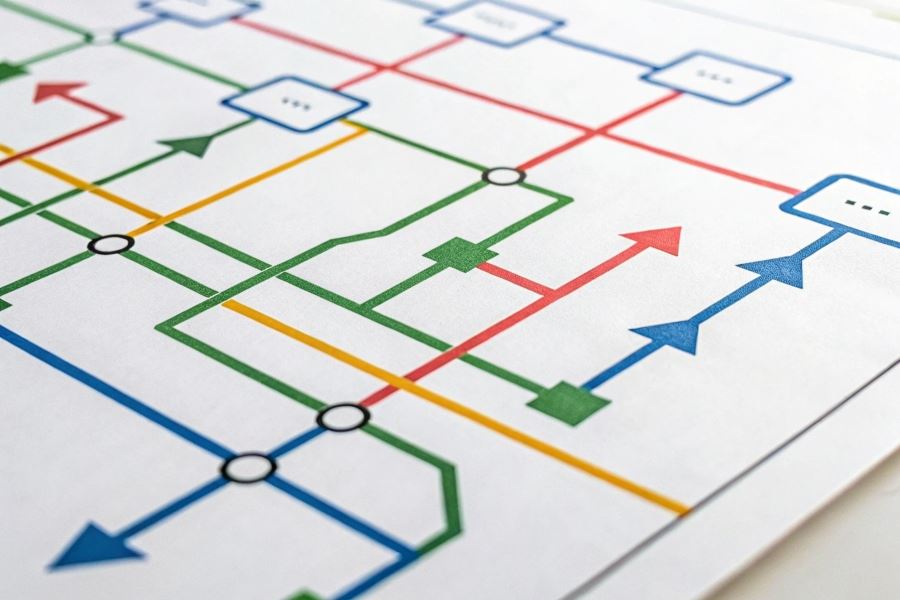

This intricate process begins with crawlers, sophisticated bots that traverse the web, following links and discovering new content. These crawlers don’t just passively read; they analyze the page’s structure, content, and metadata, extracting key information like keywords, headings, and links. This extracted data is then sent to the search engine’s massive databases. Think of these databases as highly organized libraries, meticulously cataloging every piece of information gleaned from the web. The organization isn’t random; it’s strategically designed for lightning-fast retrieval.

Database Organization

How this data is organized and stored is a closely guarded secret, but we know it involves techniques like inverted indexing. An inverted index maps each term to the list of documents that contain it (its posting list), which makes lookups extremely efficient. Imagine searching for "best hiking boots." Instead of scanning every document on the web, the search engine can fetch the posting lists for "best," "hiking," and "boots," intersect them, and, if it also stores word positions, keep only the pages where the words appear as that exact phrase. This is further enhanced by techniques like sharding and replication, which distribute the data across many servers to handle the immense volume of information and keep it highly available.
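Here is a minimal positional inverted index in Python showing that lookup: each term maps to the documents (and word positions) where it occurs, posting lists are intersected, and positions confirm the exact phrase. The documents and IDs are hypothetical, and this says nothing about how any real search engine stores its index, which, as noted, is not public.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: dict[str, str]) -> dict[str, dict[str, list[int]]]:
    """Positional inverted index: term -> {doc_id: [positions of the term in that doc]}."""
    index: dict[str, dict[str, list[int]]] = defaultdict(dict)
    for doc_id, text in docs.items():
        for pos, term in enumerate(tokenize(text)):
            index[term].setdefault(doc_id, []).append(pos)
    return index


def phrase_search(index: dict[str, dict[str, list[int]]], phrase: str) -> set[str]:
    terms = tokenize(phrase)
    if not terms:
        return set()
    # Only documents containing every term can contain the phrase (posting-list intersection).
    candidates = set(index.get(terms[0], {}))
    for term in terms[1:]:
        candidates &= set(index.get(term, {}))
    # Keep documents where the terms occur at consecutive positions.
    matches = set()
    for doc_id in candidates:
        for start in index[terms[0]][doc_id]:
            if all(start + i in index[term][doc_id] for i, term in enumerate(terms)):
                matches.add(doc_id)
                break
    return matches


docs = {
    "p1": "The best hiking boots for rocky trails",
    "p2": "Best boots for hiking in winter",
    "p3": "Hiking trails near the city",
}
index = build_index(docs)
print(sorted(phrase_search(index, "best hiking boots")))  # ['p1']
print(sorted(phrase_search(index, "hiking")))             # ['p1', 'p2', 'p3']
```

Page p2 contains all three words but not in that order, so the position check filters it out; a plain (non-positional) index could only report that all three words appear.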

Algorithm Power

Once the data is indexed, the real work begins: ranking algorithms come into play. They analyze many factors (keyword relevance, page authority, user behavior, and more) to determine the order of search results. A page's position is not simply based on the presence of keywords; it's a nuanced calculation that considers hundreds of signals. For example, a page with high-quality content, backlinks from reputable websites, and a positive user experience will generally rank higher than a page with low-quality content and few backlinks. Google's PageRank algorithm, which scores pages by the links pointing to them, has evolved considerably since its introduction and is best understood today as one of many ranking signals.
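For reference, the PageRank idea (a page is important if important pages link to it) can be computed by power iteration, as in the original published formulation. This sketch uses the commonly cited damping factor of 0.85 and a hypothetical four-page graph; it illustrates the classic algorithm, not Google's current ranking system.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Classic PageRank computed by power iteration."""
    # Every page that appears as a link source or target takes part.
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Random-jump share that every page receives.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its damped rank evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page (no outlinks): spread its rank over all pages.
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


links = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "spam": ["home"],
}
ranks = pagerank(links)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

Because nothing links to spam, its score stays at the baseline (1 - 0.85) / 4 = 0.0375, while home, which every other page links to, ends up with the highest score.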

Adapting to Change

The web is dynamic; content is constantly being created, updated, and deleted, and search engines must keep up through continuous crawling and re-indexing. Crawlers regularly revisit websites to check for updates so that search results reflect the latest information. This process is not instantaneous; there's a delay between an update and its appearance in search results. Factors like website structure, update frequency, and the overall authority of the website influence how quickly changes are indexed, so understanding this dynamic process is crucial for optimizing a website for search engines. Tools like Google Search Console can provide valuable insights into how Google sees your website and help you monitor its indexing status.
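One way to picture continuous re-crawling is a revisit policy that checks whether a page changed and adjusts how soon to come back. The sketch below compares SHA-256 fingerprints of the fetched HTML, then halves the revisit interval after a change or doubles it otherwise, within bounds. The halve/double rule and the 1-hour and 30-day limits are hypothetical choices for illustration; search engines do not publish their revisit schedules.

```python
import hashlib

# Hypothetical bounds on the revisit interval, in hours.
MIN_INTERVAL_H = 1.0
MAX_INTERVAL_H = 24.0 * 30


def content_fingerprint(html: str) -> str:
    """Hash of the page, used to tell whether it changed since the last crawl."""
    return hashlib.sha256(html.encode("utf-8")).hexdigest()


def next_interval(current_h: float, changed: bool) -> float:
    """Come back sooner after a change, back off when nothing changed (bounded)."""
    proposed = current_h / 2 if changed else current_h * 2
    return max(MIN_INTERVAL_H, min(MAX_INTERVAL_H, proposed))


def revisit(page_state: dict, fetched_html: str) -> dict:
    """Update a page's stored fingerprint and revisit interval after a crawl."""
    fingerprint = content_fingerprint(fetched_html)
    changed = fingerprint != page_state["fingerprint"]
    return {
        "fingerprint": fingerprint,
        "interval_h": next_interval(page_state["interval_h"], changed),
        "needs_reindex": changed,
    }


state = {"fingerprint": content_fingerprint("<p>v1</p>"), "interval_h": 24.0}
state = revisit(state, "<p>v1</p>")  # unchanged: wait longer next time (48 h)
state = revisit(state, "<p>v2</p>")  # changed: re-index and come back sooner (24 h)
print(state["interval_h"], state["needs_reindex"])  # 24.0 True
```

Hashing the raw HTML treats any byte change (a rotating ad or a timestamp, for instance) as an update; a real system would more likely fingerprint only the main content.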
