Indexing Heads: Types, Selection & Maintenance



Ever wonder how Google finds your website? It’s not magic, but it’s pretty close. Understanding this process is crucial for online success. Getting your site indexed correctly means more visibility, more traffic, and ultimately, more business.

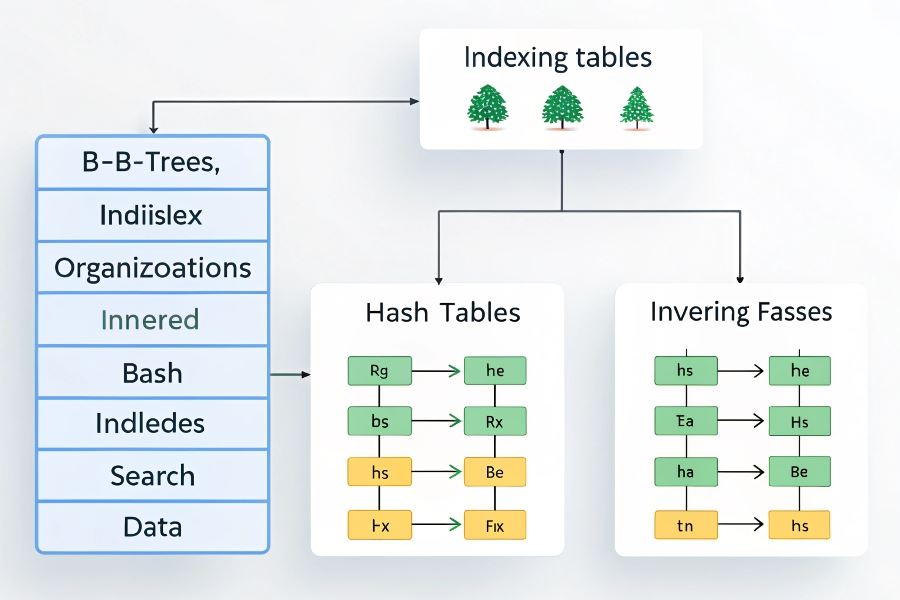

Google uses a sophisticated system of web crawlers, known as Googlebot, to discover and index web pages. These bots constantly scour the internet, following links and analyzing content. This process, where Googlebot discovers, fetches, processes, and stores information about your web pages, is vital for your website’s ranking. Managing this process effectively, and troubleshooting any issues, is where Google Search Console comes in. Using the tools within Google Search Console, you can monitor your website’s indexing status, identify and fix any problems that prevent Google from seeing your content, and ultimately improve your search engine visibility.

Understanding the Crawl and Index

Googlebot crawls your website by following links, both internal and external. Think of it as a diligent librarian meticulously cataloging every book (web page) in the library (the internet). Once a page is crawled, its content is analyzed, and relevant information is added to Google’s index – a massive database of web pages. This index is what Google uses to deliver search results.

Common Indexing Problems and Their Solutions

Sometimes, things go wrong. A common issue is a website’s robots.txt file incorrectly blocking Googlebot from accessing important pages. Another frequent problem is broken links or a sitemap that isn’t submitted correctly. These issues can prevent Google from properly indexing your content. The solution? Regularly check your robots.txt file, ensure your sitemap is up-to-date and submitted to Google Search Console, and fix any broken links promptly. Google Search Console provides detailed reports to help you identify and resolve these problems.

| Problem | Solution |
|---|---|
| Incorrect robots.txt | Review and correct your robots.txt file to allow Googlebot access. |
| Broken Links | Regularly check for and fix broken links using tools like Google Search Console. |
| Missing or Incorrect Sitemap | Submit a valid and up-to-date sitemap to Google Search Console. |

By understanding the indexing process and utilizing Google Search Console, you can significantly improve your website’s visibility and reach a wider audience.

Mastering Website Indexing

Getting your website noticed by Google is crucial for online success. But simply building a great site isn’t enough; you need to ensure search engines can effectively crawl and index your content. This often involves more than just creating high-quality pages. Understanding how search engines discover and process your website’s information is key, and that’s where a well-structured approach to site architecture and technical SEO comes into play. Successfully managing this process directly impacts your site’s visibility in search results. Monitoring your site’s performance through Google Search Console helps you understand how Google views your website and identify any indexing issues.

XML Sitemaps: Your Indexing Roadmap

Think of an XML sitemap as a detailed map of your website, guiding search engine crawlers to all your important pages. It is a structured file that lists the URLs you want Google to index, along with optional metadata such as the last modification date (lastmod) and priority. Google has stated that it ignores the priority and changefreq values and uses lastmod only when it is consistently accurate, so the URL list and truthful modification dates are what matter most. A sitemap is a hint rather than a guarantee: it helps Google discover pages and understand the structure of your site, but it does not force indexing. For example, a large e-commerce site with thousands of products benefits from a comprehensive sitemap because product pages buried deep in category listings become easier to discover. Submitting your sitemap to Google Search Console https://www.google.com/webmasters/tools/ lets Googlebot find and process this information.
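As a rough illustration of what such a file contains, here is a minimal sketch that builds a sitemap with Python's standard library. The function name `build_sitemap` and the example.com URLs are hypothetical; only the `urlset`, `url`, `loc`, and `lastmod` elements and the namespace come from the sitemap protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML (UTF-8 bytes) for an iterable of (url, lastmod) pairs.

    lastmod is a W3C date string such as "2025-06-13", or None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    # Hypothetical pages; replace with URLs from your own site.
    pages = [
        ("https://example.com/", "2025-06-13"),
        ("https://example.com/products/widget", None),
    ]
    print(build_sitemap(pages).decode("utf-8"))
```

The sketch leaves out priority and changefreq on purpose, and it does not split large sites into multiple files, which the protocol requires once a sitemap exceeds 50,000 URLs.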

Robots.txt: Controlling the Crawlers

While sitemaps tell Google which pages you want indexed, robots.txt controls which parts of your site crawlers may fetch. This simple text file lets you block crawling of specific directories, pages, or entire sections. That is useful for keeping crawlers out of duplicate content, pages under development, a staging environment, or internal administrative areas. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it, and the file itself is publicly readable. To protect sensitive information, use authentication; to keep a crawlable page out of the index, use a noindex directive instead. Manage your robots.txt file carefully to avoid accidentally blocking important content, because a misconfigured file shows up as blocked pages in your Google Search Console indexing reports.
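One way to catch an accidental block before it reaches production is to test your important URLs against the rules. The sketch below uses Python's standard `urllib.robotparser`; the rules in `ROBOTS_TXT`, the helper name `blocked_urls`, and the example.com URLs are made up for illustration. Python's parser does simple prefix matching and does not replicate every detail of Googlebot's rule matching (wildcards, for instance), so treat the result as a first check rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of admin and staging areas.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    important = [
        "https://example.com/products/widget",
        "https://example.com/admin/login",
    ]
    for url in blocked_urls(ROBOTS_TXT, important):
        print("Blocked by robots.txt:", url)
```

Running a check like this against the list of URLs in your sitemap highlights pages you are asking Google to index while also telling it not to crawl them.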

Structured Data: Speaking Google’s Language

Structured data markup uses schema.org vocabulary to give search engines additional context about your content. By embedding this markup in your website’s HTML, you give Google a clearer understanding of what your pages are about. Its main benefit is eligibility for richer snippets in search results (like star ratings or product details); it does not by itself guarantee rich results or higher rankings. For example, adding structured data to your product pages can improve click-through rates by making your listings more appealing and informative. Google’s Rich Results Test https://search.google.com/test/rich-results can help you validate your structured data implementation. Valid markup helps Google interpret your content accurately.
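To make this concrete, here is a minimal sketch that renders schema.org Product markup as a JSON-LD script tag. The function name `product_jsonld` and the sample values are hypothetical, and the property set is deliberately small; check Google's documentation for the properties a given rich result type actually requires.

```python
import json


def product_jsonld(name, rating_value, review_count):
    """Return a <script> tag containing schema.org Product markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }
    # Escape "</" so text inside the JSON cannot close the script tag early.
    payload = json.dumps(data).replace("</", "<\\/")
    return '<script type="application/ld+json">' + payload + "</script>"


if __name__ == "__main__":
    # Hypothetical product; paste the output into the page's HTML.
    print(product_jsonld("Example Widget", 4.5, 12))
```

Generating the markup from the same data that renders the visible page helps keep the two in sync, which matters because the markup is expected to describe content users can actually see.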

Uncover Indexing Issues

Ever feel like your website is shouting into the void, despite your best SEO efforts? You’ve crafted compelling content, optimized your images, and built a robust sitemap—yet your rankings remain stubbornly stagnant. The culprit might be lurking in plain sight: indexing problems. Understanding how Google crawls and indexes your pages is crucial for success, and that’s where leveraging the power of Google Search Console comes in. Proper use of Google Search Console allows you to monitor your site’s performance and identify any issues preventing Google from properly indexing your content.

Let’s dive into the detective work. Analyzing Google Search Console data is the first step. Think of it as your website’s health report. The platform provides a wealth of information, from crawl errors to index coverage. Pay close attention to the "Coverage" report (labeled "Page indexing" in current versions of Search Console). It shows which pages Google has indexed, which it couldn’t access, and which were submitted but not indexed, with a reason for each. A high number of errors here suggests a significant problem. For example, a large number of 404 errors on URLs you expect to be live points to broken links or missing pages that need attention. Identifying these errors is only half the battle; resolving them is where the real work begins.

Fixing Broken Links

Broken links are like potholes on your website’s highway: they disrupt the user experience and waste crawler requests. Use Google Search Console’s data to pinpoint these problematic links. Once identified, you have two main options: redirect the broken URL to a relevant page using a 301 (permanent) redirect, or remove the link altogether. A 301 redirect tells search engines the page has moved permanently, so the value associated with the old URL, such as its inbound links, is generally passed to the new one. Removing the link is appropriate if the content no longer exists and has no relevant replacement. Crawling tools like Screaming Frog can find broken links at scale; the fixes themselves still have to be made on your site.
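For a small list of URLs, the detection step can be sketched with the standard library alone. The names `http_status` and `find_broken` are hypothetical helpers, not part of any tool mentioned above. The sketch sends HEAD requests and follows redirects, so it reports the final status of each URL; some servers answer HEAD differently from GET, so treat a reported failure as a prompt to check by hand.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Return the final HTTP status for a HEAD request, or None if unreachable."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses are raised as HTTPError; keep the status code.
        return error.code
    except OSError:
        # DNS failures, refused connections, and timeouts end up here.
        return None


def find_broken(urls, fetch_status=http_status):
    """Map each broken URL to its status (None means the request itself failed)."""
    broken = {}
    for url in urls:
        status = fetch_status(url)
        if status is None or status >= 400:
            broken[url] = status
    return broken


if __name__ == "__main__":
    # Hypothetical URLs; in practice, feed in the links found on your pages.
    for url, status in find_broken(["https://example.com/"]).items():
        print(status, url)
```

The `fetch_status` parameter exists so the logic can be exercised without network access, for example by passing a dictionary's `get` method that maps URLs to canned status codes.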

Site Architecture Improvements

Beyond broken links, your website’s architecture plays a vital role in indexing. A poorly structured site can make it difficult for Googlebot to crawl and index all your pages. Ensure your site has a clear and logical hierarchy, with internal links connecting related pages. This helps Google understand the relationship between different parts of your website and improves overall crawlability. Consider using a sitemap to guide Googlebot through your website’s structure. Submitting your sitemap to Google Search Console makes Google aware of all your pages, increasing the likelihood that they are indexed.
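One way to put a number on "clear and logical hierarchy" is click depth: how many internal links a crawler must follow from the home page to reach each page. The sketch below assumes you already have the internal link graph as a dictionary (for instance, exported from a crawl); the function names and the sample graph are hypothetical.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search: minimum number of clicks from home to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, home):
    """Pages that appear in the graph but cannot be reached from home."""
    reachable = set(click_depths(links, home))
    known = set(links) | {t for targets in links.values() for t in targets}
    return sorted(known - reachable)


if __name__ == "__main__":
    # Hypothetical internal link graph: page -> pages it links to.
    links = {
        "/": ["/products", "/about"],
        "/products": ["/products/widget"],
        "/old-landing": ["/"],
    }
    print(click_depths(links, "/"))
    print(orphan_pages(links, "/"))
```

Pages with a large depth, or pages that show up as orphans, are candidates for additional internal links from higher-level pages.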

Monitoring Progress

Fixing indexing issues isn’t a one-time event; it’s an ongoing process. Regularly monitor your Google Search Console data to track your progress. Look for improvements in the "Coverage" report, and pay attention to any new errors that might emerge. This continuous monitoring allows you to make necessary adjustments and ensure your website remains optimally indexed. By proactively addressing indexing issues, you’ll improve your website’s visibility in search results and ultimately drive more organic traffic. Remember, consistent monitoring and optimization are key to long-term SEO success.
