Unlocking SEO Success: The Power of a Dependable Link Indexer

→ Link to Telegram bot

Who can benefit from SpeedyIndexBot service?

The service is useful for website owners and SEO specialists who want to increase their visibility in Google and Yandex, improve site positions, and increase organic traffic.

SpeedyIndex helps to index backlinks, new pages and updates on the site faster.

How it works.

Choose the type of task: indexing or index checking. Send the task to the bot as a .txt file, or as a message containing up to 20 links.

Get a detailed report.

Our benefits

- Give 100 links for indexing and 50 links for index checking

- Send detailed reports

- Pay a 15% referral commission

- Top up your balance by card, cryptocurrency, or PayPal

- API

We credit 70% of unindexed links back to your balance when you order indexing in Yandex and Google.

Imagine your website as a bustling city, with search engine bots as the delivery drivers. They need efficient routes to deliver your content to the right addresses (the search results). Getting your pages indexed quickly is crucial for visibility and ranking, and achieving it requires understanding how search engines work and optimizing your site for their crawlers.

Understanding Crawl Budget

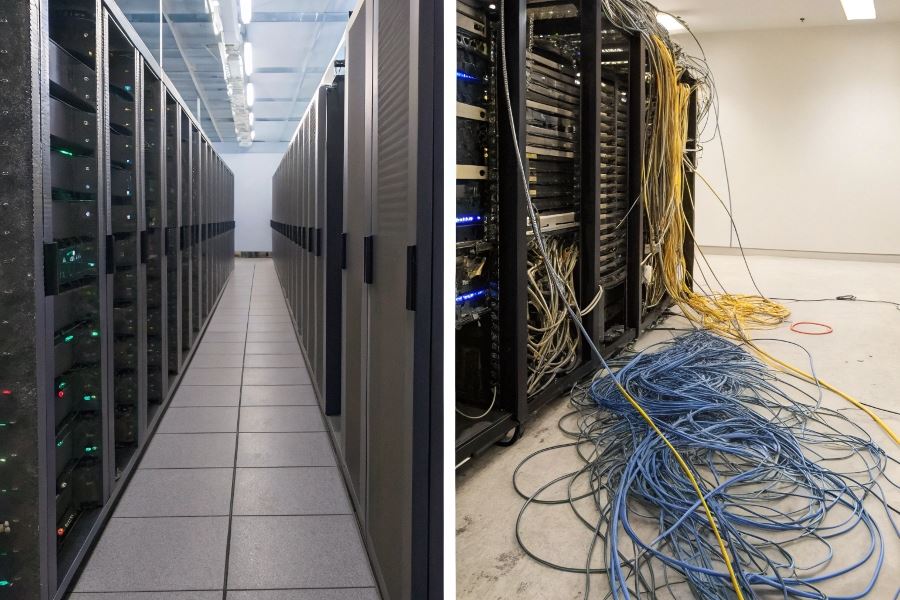

Every website has a limited "crawl budget": the number of pages a search engine bot will crawl within a given timeframe. A large website with poor site architecture might have its budget spread too thin, so some pages are overlooked. Conversely, a well-structured site with clear internal linking guides bots efficiently and makes the most of that budget. Think of it like this: a well-organized warehouse allows faster, more efficient order picking, while a cluttered one slows everything down.

Identifying and Addressing Technical SEO Issues

Technical SEO issues can significantly impede indexing. Broken links, duplicate content, and slow page load speeds all frustrate search engine bots. For example, a website with numerous 404 errors signals to search engines that content is missing or inaccessible, reducing the likelihood of indexing. Similarly, duplicate content confuses search engines, making it difficult to determine which version to index. Regularly auditing your website for these issues using tools like Google Search Console is crucial. Addressing these problems ensures search engines can efficiently crawl and index your valuable content, ultimately improving your search engine rankings.
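As a rough illustration of such an audit, the sketch below flags broken URLs and groups pages whose bodies are identical after whitespace and case normalisation. It assumes you already have crawl results as a mapping of URL to status code and body text; the function name `audit_pages` and the sample data are hypothetical, and real duplicate detection usually needs fuzzier matching than an exact hash.

```python
import hashlib
from collections import defaultdict


def audit_pages(pages):
    """pages maps URL -> (HTTP status code, body text).

    Returns (broken, duplicates): URLs with a 4xx/5xx status, and groups
    of URLs whose normalised bodies are identical.
    """
    broken = sorted(url for url, (status, _) in pages.items() if status >= 400)

    by_hash = defaultdict(list)
    for url, (status, body) in pages.items():
        if status == 200:
            # Collapse whitespace and case so trivial differences
            # do not hide an otherwise identical page.
            normalised = " ".join(body.split()).lower()
            digest = hashlib.sha256(normalised.encode("utf-8")).hexdigest()
            by_hash[digest].append(url)

    duplicates = sorted(sorted(urls) for urls in by_hash.values() if len(urls) > 1)
    return broken, duplicates


# Hypothetical crawl results, for illustration only.
sample_pages = {
    "https://example.com/": (200, "Welcome to the shop"),
    "https://example.com/index.html": (200, "Welcome  to the shop"),
    "https://example.com/old-page": (404, ""),
    "https://example.com/about": (200, "About us"),
}
broken, duplicates = audit_pages(sample_pages)
```

Here `broken` lists the 404 page, and `duplicates` groups the home page with its `index.html` twin, which is the kind of pair a canonical tag or redirect would normally resolve.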

Speed Up Indexing

A slow crawl can mean lost traffic and missed opportunities. But what if you could dramatically improve your search engine visibility without resorting to black-hat techniques? The key lies in understanding how search engine crawlers work and optimizing your website to make their job easier. A few key strategies help your links get indexed faster.

Sitemap Mastery

Submitting a well-structured XML sitemap to Google Search Console and Bing Webmaster Tools is the first step. Think of it as giving search engine bots a detailed roadmap of your website. This ensures they can efficiently discover and index all your important pages, especially new or updated content. A poorly structured or incomplete sitemap, however, can lead to pages being missed entirely. Ensure your sitemap is up-to-date and accurately reflects your website’s structure. Regularly check your Search Console for crawl errors and address any issues promptly. This proactive approach will significantly improve your chances of rapid indexing.
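For reference, a minimal sitemap can be generated with Python's standard library. This is a sketch under simple assumptions: the helper name `build_sitemap` and the example URLs are hypothetical, and only the `loc` and `lastmod` elements of the sitemaps.org protocol are emitted.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from an iterable of (url, lastmod) pairs.

    lastmod is expected as an ISO 8601 date string such as "2025-07-04".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in text automatically.
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages, for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2025-07-04"),
    ("https://example.com/blog/new-post?ref=a&b=1", "2025-07-03"),
])
```

Regenerating the file whenever pages are added or updated keeps `lastmod` meaningful, which is what makes the sitemap useful for new content.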

Robots.txt Optimization

While sitemaps tell search engines what to crawl, robots.txt tells them what not to crawl. This file, located at the root of your website, gives crawlers instructions about which parts of your site they may fetch and which they should skip. Note that it controls crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it, so use a noindex directive on a crawlable page when you want it kept out of the index. A poorly configured robots.txt can inadvertently block important pages from being crawled, hindering your SEO efforts. Carefully review your robots.txt file to ensure it doesn’t accidentally block access to crucial content. Tools like Screaming Frog SEO Spider can help you analyze your robots.txt and identify potential issues.
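One way to review a robots.txt file is to test your important URLs against it. The sketch below uses Python's built-in `urllib.robotparser`; the helper name `blocked_urls`, the sample rules, and the URLs are hypothetical. The standard-library parser follows the original robots.txt conventions and does not implement every extension individual search engines support (wildcard patterns, for example), so treat the result as a first check rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt that blocks the blog by mistake.
sample_robots = """\
User-agent: *
Disallow: /admin/
Disallow: /blog/
"""

important = [
    "https://example.com/blog/new-post",
    "https://example.com/products/widget",
]
blocked = blocked_urls(sample_robots, important)
```

In this example `blocked` contains only the blog URL, which points at the stray `Disallow: /blog/` rule.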

Internal Linking Power

Internal linking is often underestimated, but it’s a powerful tool for accelerating indexation. By strategically linking relevant pages within your website, you’re essentially guiding search engine crawlers through your content. This creates a clear path for them to follow, making it easier to discover and index all your pages. Focus on creating a logical and intuitive internal linking structure that reflects the natural flow of information on your website. Avoid excessive or irrelevant linking, which can be detrimental to your SEO. Think of it as creating a well-organized library, where each book (page) is easily accessible from related sections.
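To make the idea concrete, internal links can be treated as a graph and measured. The sketch below computes how many clicks each page is from the home page and lists pages that cannot be reached at all. The function names and the sample link map are hypothetical; in practice the map would come from a crawl of your own site.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search: number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, home):
    """Pages that appear in the link map but are unreachable from home."""
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(all_pages - set(click_depths(links, home)))


# Hypothetical link map: page -> pages it links to.
sample_links = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1"],
    "/products": [],
    "/blog/post-1": ["/"],
    "/landing-old": ["/"],
}
depths = click_depths(sample_links, "/")
orphans = orphan_pages(sample_links, "/")
```

Pages with a large depth, or pages that show up in `orphans`, are the ones a crawler following links is least likely to reach, so they are good candidates for new internal links.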

Structured Data’s Advantage

Structured data markup, using schema.org vocabulary, helps search engines understand the content on your pages more effectively. By providing context, you give search engines a clearer picture of what your content is about. This can improve search results visibility, and it may help indexing indirectly. Implementing structured data is relatively straightforward, and there are numerous tools and resources available to assist you. For example, you can use Google’s Rich Results Test, which replaced the older Structured Data Testing Tool, or the Schema Markup Validator to validate your markup and ensure it’s correctly implemented. An added benefit is improved click-through rates in search results thanks to rich snippets.
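As an illustration, schema.org markup is commonly embedded as a JSON-LD script tag. The sketch below builds one for an article; the helper name `article_jsonld` and the sample values are hypothetical, and only a handful of `Article` properties are shown. Which properties a search engine actually requires for a given rich result is something to confirm in its own documentation.

```python
import json


def article_jsonld(headline, author, date_published, url):
    """Return a JSON-LD <script> tag describing a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    # Escape "</" so text inside the JSON cannot close the script tag early;
    # "\/" is a valid JSON escape for "/".
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


# Hypothetical article details, for illustration only.
snippet = article_jsonld(
    "Speed Up Indexing",
    "Jane Doe",
    "2025-07-04",
    "https://example.com/blog/speed-up-indexing",
)
```

The resulting tag goes in the page's HTML, and a validator can then confirm that it parses as intended.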

By implementing these strategies, you can significantly improve your website’s crawl rate and ensure your content is indexed quickly and efficiently. Remember, consistent monitoring and optimization are key to maintaining optimal search engine visibility.

Track Your Indexing Success

Submitting a sitemap isn’t enough on its own; you need a robust strategy to ensure Google and other search engines crawl and index your pages efficiently. This means understanding how to accelerate the process and, critically, how to measure that acceleration. This isn’t just about speed; it’s about understanding why some links index faster than others and using that knowledge to refine your approach.

Google Search Console Insights

Google Search Console is your first port of call. Don’t just passively check it; actively use its data to understand indexing trends. Look beyond the overall number of indexed pages. Analyze the Page indexing report (formerly the Coverage report) to identify issues hindering indexing, such as crawl errors or indexing errors. Pay close attention to the "Indexed, not submitted in sitemap" status: it shows pages Google found on its own, which often reveals gaps in your sitemap and tells you something about your internal linking. For example, a significant number of pages indexed despite not being listed in your sitemap suggests your internal linking is working well and driving discovery, and those pages are candidates to add to the sitemap. Conversely, a large number of pages not indexed despite being in your sitemap might indicate issues with your site’s architecture or technical SEO.
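The two comparisons described above come down to set differences. The sketch below assumes you have two plain lists of URLs, one from your sitemap and one of indexed pages exported from your reporting tool; the function name `compare_coverage`, the result keys, and the sample data are hypothetical.

```python
def compare_coverage(sitemap_urls, indexed_urls):
    """Compare sitemap URLs with indexed URLs and summarise the gaps."""
    sitemap, indexed = set(sitemap_urls), set(indexed_urls)
    return {
        # Found by the search engine without sitemap help.
        "indexed_not_in_sitemap": sorted(indexed - sitemap),
        # Submitted but not (yet) indexed.
        "in_sitemap_not_indexed": sorted(sitemap - indexed),
        # Fraction of sitemap URLs that are indexed.
        "indexed_share_of_sitemap": (
            len(sitemap & indexed) / len(sitemap) if sitemap else 0.0
        ),
    }


# Hypothetical URL lists, for illustration only.
coverage = compare_coverage(
    sitemap_urls=["/", "/a", "/b", "/c"],
    indexed_urls=["/", "/a", "/d"],
)
```

Tracking `indexed_share_of_sitemap` over time gives a single number to watch, while the two lists tell you which specific pages to investigate.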

Monitoring Indexing Speed

While Google Search Console provides a high-level overview, you need more granular data to truly understand indexing speed. Tools like Screaming Frog can help. By crawling your website, you can identify pages that are hard for crawlers to reach and pinpoint potential bottlenecks. Combine this with Google Search Console data to correlate crawl errors with indexing delays. For instance, if you see a high number of 404 errors in Screaming Frog and a corresponding drop in indexed pages in Search Console, you know where to focus your efforts. Remember, consistent monitoring is key. Regular crawls will help you identify emerging issues before they significantly impact your indexing performance.

Data-Driven Refinement

The real power lies in using this data to improve your process. Let’s say you discover a significant delay in indexing for pages within a specific section of your website. This could indicate a problem with internal linking within that section, a lack of high-quality backlinks pointing to those pages, or even a technical issue preventing search engine crawlers from accessing them. By analyzing the data from both Google Search Console and tools like Screaming Frog, you can pinpoint the root cause and implement targeted solutions. This might involve improving your site’s architecture, enhancing your internal linking strategy, or addressing technical SEO issues. Continuous monitoring and iterative refinement let you optimize your approach over time, so your content is consistently indexed quickly and efficiently.
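Spotting a slow section is easier with a per-section summary. The sketch below groups pages by their first path segment and reports the median number of days between publication and first indexing, plus a count of pages still not indexed. It assumes you keep your own record of publish dates and first-seen-indexed dates; the function name `lag_by_section` and the sample records are hypothetical.

```python
from collections import defaultdict
from datetime import date
from statistics import median
from urllib.parse import urlparse


def lag_by_section(records):
    """records: iterable of (url, published date, first-indexed date or None)."""
    lags, pending = defaultdict(list), defaultdict(int)
    for url, published, indexed in records:
        segments = [s for s in urlparse(url).path.split("/") if s]
        # Use the first path segment as the section, e.g. "/blog".
        section = "/" + segments[0] if segments else "/"
        if indexed is None:
            pending[section] += 1
        else:
            lags[section].append((indexed - published).days)

    report = {}
    for section in sorted(set(lags) | set(pending)):
        days = lags.get(section, [])
        report[section] = {
            "median_lag_days": median(days) if days else None,
            "not_indexed": pending.get(section, 0),
        }
    return report


# Hypothetical tracking data, for illustration only.
sample_records = [
    ("https://example.com/blog/a", date(2025, 7, 1), date(2025, 7, 2)),
    ("https://example.com/blog/b", date(2025, 7, 1), date(2025, 7, 4)),
    ("https://example.com/docs/x", date(2025, 7, 1), date(2025, 7, 11)),
    ("https://example.com/docs/y", date(2025, 7, 1), None),
    ("https://example.com/docs/z", date(2025, 7, 1), date(2025, 7, 15)),
]
section_report = lag_by_section(sample_records)
```

In this sample the `/docs` section is both slower and has an unindexed page, which is the signal to check its internal links and crawlability first.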
